Most agent failures aren’t model failures - they’re memory failures.

Hallucinations, task drift, irrelevant responses, and runaway token costs often stem from one root cause: poorly designed memory architecture.

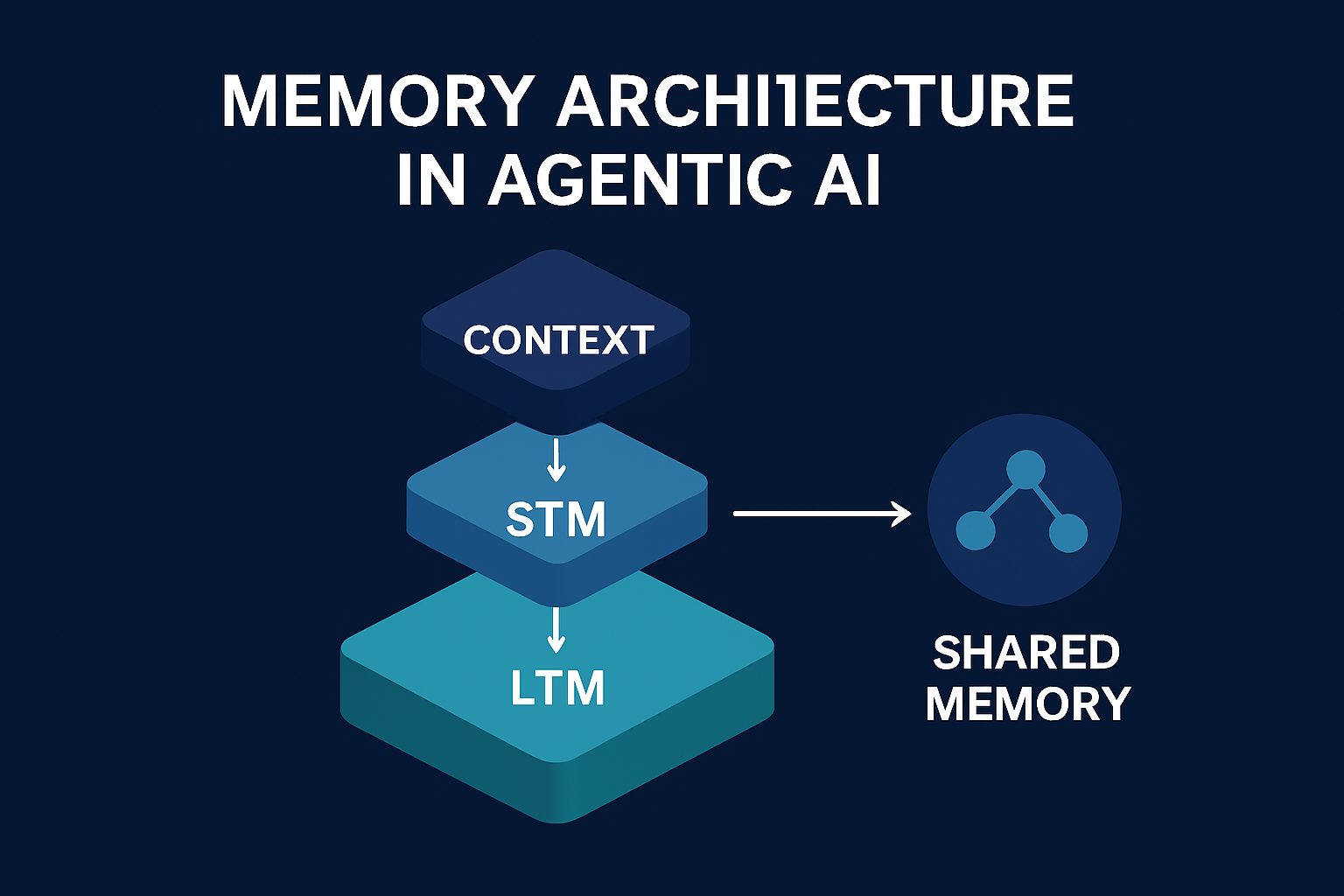

Memory architecture is a foundational concept in agentic AI: it lets a system retain context, learn from past experiences, and make informed decisions over time.

Take the example of a chatbot. Under the hood, it behaves like a distributed system with volatile memory, persistent stores, retrieval layers, and evolving knowledge bases. Without a memory architecture, that system collapses into a series of stateless RPC calls. A useful chatbot is therefore not a stateless service call; it is a miniature distributed system with a memory fabric.

Memory makes three capabilities possible: statefulness, persistence, and relationships.

Statefulness is about contextual awareness. Agents maintain a “working memory”, or short-term memory (STM), for immediate tasks. It holds the current goals and conversation flow, often within the LLM’s context window or in in-memory buffers. STM isn’t merely the LLM’s context window, though: it’s the entire working set the agent must shuttle in and out at runtime, constrained by token limits, latency, and cost.

Without STM, the agent treats every query as a fresh prompt — breaking multi-turn reasoning and preventing complex task decomposition.
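To make the working-set idea concrete, here is a minimal sketch of an STM buffer that evicts the oldest turns once a token budget is exceeded. The `ShortTermMemory` class and the word-count `count_tokens` helper are illustrative assumptions, not part of any particular framework; a real agent would use its model's tokenizer.

```python
from collections import deque
from dataclasses import dataclass, field


def count_tokens(text: str) -> int:
    """Assumption: one token per whitespace-separated word (a real tokenizer differs)."""
    return len(text.split())


@dataclass
class ShortTermMemory:
    """Hypothetical working memory bounded by a token budget."""
    max_tokens: int
    turns: deque = field(default_factory=deque)

    def total_tokens(self) -> int:
        return sum(count_tokens(text) for _, text in self.turns)

    def add(self, role: str, text: str) -> None:
        self.turns.append((role, text))
        # Evict the oldest turns until the working set fits the budget again.
        # The newest turn is always kept, even if it alone exceeds the budget.
        while self.total_tokens() > self.max_tokens and len(self.turns) > 1:
            self.turns.popleft()

    def as_prompt(self) -> str:
        return "\n".join(f"{role}: {text}" for role, text in self.turns)


stm = ShortTermMemory(max_tokens=12)
stm.add("user", "Book a flight to Paris next Friday")
stm.add("agent", "Morning or evening departure?")
stm.add("user", "Evening please")  # pushes the total over budget, oldest turn is dropped
print(stm.as_prompt())
```

Dropping the oldest turn is the simplest eviction policy; it is also the one most likely to discard the original goal, which is why summarizing evicted turns is a common refinement.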

Persistence means memory across sessions. It is enabled by long-term memory (LTM), which stores user preferences, past events, user data, and similar records in external databases, often indexed by embeddings so they can be retrieved by similarity.

LTM enables chat continuity, recall of user details, and learning and adaptation over days, weeks, or longer timeframes.

Without LTM, agents forget user intent, re-ask solved questions, and lose alignment — leading to drift and degraded trust.
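As a rough illustration of embedding-based recall, the sketch below stores memories alongside a vector and returns the closest match to a query. The `LongTermMemory` class is hypothetical, and `embed` is a toy bag-of-words stand-in; a production system would call an embedding model and a vector database instead.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy bag-of-words vector. Assumption: stands in for a real embedding model."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[term] * b[term] for term in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class LongTermMemory:
    """Hypothetical in-memory stand-in for an external vector store."""

    def __init__(self) -> None:
        self._records: list[tuple[str, Counter]] = []

    def remember(self, text: str) -> None:
        self._records.append((text, embed(text)))

    def recall(self, query: str, k: int = 1) -> list[str]:
        query_vec = embed(query)
        ranked = sorted(self._records, key=lambda r: cosine(query_vec, r[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


ltm = LongTermMemory()
ltm.remember("user prefers window seats on flights")
ltm.remember("user is allergic to peanuts")
print(ltm.recall("which seats does the user like on flights"))
```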

Relationships in an AI system map to shared state.

This includes user-agent personalization as well as multi-agent collaboration through a shared memory fabric (vector stores, key-value stores, or message buses).

This is the backbone of cooperative agents — the shared memory prevents agents from operating in silos.
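A shared memory fabric can be as simple as a key-value store that several agents read and write. The sketch below is an in-process stand-in under that assumption; `SharedMemory`, `research_agent`, and `writer_agent` are hypothetical names, and a real deployment would more likely sit on an external store or message bus.

```python
import threading


class SharedMemory:
    """Hypothetical in-process stand-in for a shared key-value store."""

    def __init__(self) -> None:
        self._data: dict[str, str] = {}
        self._lock = threading.Lock()  # guards concurrent agent access

    def write(self, key: str, value: str) -> None:
        with self._lock:
            self._data[key] = value

    def read(self, key: str, default: str = "") -> str:
        with self._lock:
            return self._data.get(key, default)


def research_agent(memory: SharedMemory) -> None:
    # One agent publishes what it has learned.
    memory.write("findings", "Competitor X cut prices by 10%")


def writer_agent(memory: SharedMemory) -> str:
    # Another agent builds on that state instead of rediscovering it.
    findings = memory.read("findings", default="no findings yet")
    return f"Summary for the user: {findings}"


shared = SharedMemory()
research_agent(shared)
print(writer_agent(shared))
```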

Long-term memory can be of the following types:

Semantic memory stores facts, concepts, and rules. It can be implemented with knowledge bases, vector embeddings, or even a graph database.

Episodic memory records key events and interactions along with when they happened. For example: a customer gave negative feedback on the chatbot’s guidance a week ago.

Procedural memory encodes action-oriented knowledge and learned skills acquired through training or experience.

These aren’t theoretical distinctions — they map to different storage engines, retrieval patterns, and operational costs.

The good news: many of these memory challenges mirror ones architects already solve in traditional systems.

Memory hierarchy and caching: The principle of organizing memory into layers based on speed and cost (CPU cache, RAM, disk) can be extended to AI systems.

The STM of the context window, the LLM cache for the immediate session, and LTM such as vector storage for knowledge persistence each need to be used judiciously. Architects should optimize data transfer between these tiers by caching frequently accessed data and keeping the most relevant data in the fastest tier.
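The tiering idea can be sketched as a small LRU cache in front of a slower store. `TieredMemory` and the plain dict used as the backing store are assumptions for illustration; the slow tier would typically be a vector database or other external service.

```python
from collections import OrderedDict
from typing import Optional


class TieredMemory:
    """Hypothetical two-tier memory: a small LRU cache over a slow backing store."""

    def __init__(self, backing_store: dict, cache_size: int = 2) -> None:
        self.cache: OrderedDict = OrderedDict()  # fast tier
        self.store = backing_store               # slow tier (stand-in for a database)
        self.cache_size = cache_size
        self.slow_reads = 0                      # counts trips to the slow tier

    def get(self, key: str) -> Optional[str]:
        if key in self.cache:
            self.cache.move_to_end(key)          # mark as most recently used
            return self.cache[key]
        value = self.store.get(key)
        if value is not None:
            self.slow_reads += 1
            self.cache[key] = value
            if len(self.cache) > self.cache_size:
                self.cache.popitem(last=False)   # evict the least recently used entry
        return value


memory = TieredMemory({"tone": "formal", "language": "French", "plan": "premium"})
memory.get("tone")       # miss: fetched from the slow tier
memory.get("tone")       # hit: served from the cache
memory.get("language")   # miss
memory.get("plan")       # miss: cache is full, so "tone" is evicted
print(memory.slow_reads, list(memory.cache))
```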

Virtual memory and swapping: Operating systems use virtual memory to provide a large address space by swapping data between RAM and disk as needed.

Likewise, AI agents can create a “virtual context” and swap memories in and out of the limited context window. Architects should optimize for cost by loading only relevant knowledge into the context window and evicting less relevant information.
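One way to picture this swapping is a function that pages the most relevant memories into a fixed token budget and leaves the rest in the archive. The sketch assumes a toy word-overlap `relevance` score and word counts as token costs; both are placeholders for real retrieval scoring and tokenization.

```python
def relevance(query: str, memory: str) -> int:
    """Toy relevance score: number of shared lowercase words (an assumption)."""
    return len(set(query.lower().split()) & set(memory.lower().split()))


def page_in(query: str, archive: list[str], budget: int) -> list[str]:
    """Select the most relevant memories that fit within a token budget."""
    ranked = sorted(archive, key=lambda m: relevance(query, m), reverse=True)
    context, used = [], 0
    for memory in ranked:
        if relevance(query, memory) == 0:
            break                      # everything after this is irrelevant too
        cost = len(memory.split())     # assumption: one token per word
        if used + cost <= budget:
            context.append(memory)
            used += cost
    return context


archive = [
    "refund policy allows returns within 30 days",
    "user ordered a blue jacket in March",
    "office wifi password was rotated",
]
query = "what is the refund policy for the jacket"
print(page_in(query, archive, budget=8))
print(page_in(query, archive, budget=14))
```

With a budget of 8 only the refund policy fits; raising it to 14 also pages in the order record, while the unrelated wifi note never enters the context.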

Dynamic Allocation and Garbage Collection: Traditional systems manage heap memory dynamically, allocating and deallocating memory at runtime, often using automated garbage collection to reclaim unused space.

AI Architects can similarly implement “memory refresh” or decay functions that prioritize knowledge based on recency, frequency of access, and explicit importance flags, similar to how garbage collectors identify and free unused objects.
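A decay function of this kind might look like the following sketch. The scoring formula, the 24-hour half-life, the importance multiplier, and the 0.2 threshold are all arbitrary assumptions chosen for illustration, not recommended values.

```python
import math
from dataclasses import dataclass


@dataclass
class MemoryItem:
    text: str
    age_hours: float
    access_count: int
    important: bool = False


def retention_score(item: MemoryItem, half_life_hours: float = 24.0) -> float:
    """Hypothetical score combining recency, frequency, and an importance flag."""
    recency = 0.5 ** (item.age_hours / half_life_hours)  # halves every half-life
    frequency = 1.0 + math.log1p(item.access_count)      # diminishing returns on access
    boost = 2.0 if item.important else 1.0
    return boost * recency * frequency


def collect_garbage(items: list[MemoryItem], threshold: float = 0.2) -> list[MemoryItem]:
    """Keep only memories whose score is at or above the threshold."""
    return [item for item in items if retention_score(item) >= threshold]


items = [
    MemoryItem("asked about pricing just now", age_hours=0, access_count=0),
    MemoryItem("small talk about the weather", age_hours=72, access_count=0),
    MemoryItem("user is allergic to peanuts", age_hours=72, access_count=0, important=True),
]
kept = collect_garbage(items)
print([item.text for item in kept])
```

The three-day-old small talk scores 0.125 and is dropped, while the equally old allergy note survives at 0.25 because of its importance flag.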

Segmentation and Logical Organization: Segmentation in traditional operating systems divides a program’s memory into logical, variable-sized chunks (such as code and data) for efficient management, security, and sharing.

Likewise, AI architects can use a modular design in which the different memory types (episodic, semantic, procedural, and so on) are each stored and managed with the most appropriate technology (e.g. a vector database for semantic memory, a traditional database for structured facts) and retrieved according to the specific task’s requirements.
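A modular layout can be sketched as a router that keeps each memory type in its own segment. Here every segment is a plain list so the example stays self-contained; `MemoryRouter` is a hypothetical name, and in practice each segment would be backed by whichever store suits that memory type.

```python
from enum import Enum


class MemoryType(Enum):
    SEMANTIC = "semantic"
    EPISODIC = "episodic"
    PROCEDURAL = "procedural"


class MemoryRouter:
    """Hypothetical router; each segment is a list standing in for a real backend."""

    def __init__(self) -> None:
        self.segments: dict[MemoryType, list[str]] = {kind: [] for kind in MemoryType}

    def store(self, kind: MemoryType, record: str) -> None:
        self.segments[kind].append(record)

    def retrieve(self, kind: MemoryType) -> list[str]:
        # Return a copy so callers cannot mutate the segment directly.
        return list(self.segments[kind])


router = MemoryRouter()
router.store(MemoryType.SEMANTIC, "Refunds are allowed within 30 days")
router.store(MemoryType.EPISODIC, "Customer gave negative feedback last week")
router.store(MemoryType.PROCEDURAL, "To issue a refund: verify order, then call billing")
print(router.retrieve(MemoryType.EPISODIC))
```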

Fragmentation and Compaction: Dynamic memory allocation leads to fragmentation (small, unusable gaps of memory), which operating systems resolve via compaction. Likewise, AI architects can use techniques like memory summarization (compression) and periodically consolidate related memory traces to mitigate the same effect in agent memory, thereby ensuring efficient use of the available context window and storage.
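Consolidation can be illustrated with a small function that merges scattered traces about the same topic into one record. The `compact` function and its `(topic, note)` input shape are assumptions; the "summary" here is just deduplicated notes joined together, where a real system might ask an LLM to write the summary.

```python
from collections import defaultdict


def compact(traces: list[tuple[str, str]]) -> dict[str, str]:
    """Merge (topic, note) traces into one consolidated note per topic."""
    grouped: dict[str, list[str]] = defaultdict(list)
    for topic, note in traces:
        if note not in grouped[topic]:  # drop verbatim duplicates
            grouped[topic].append(note)
    # Placeholder summarization: join the surviving notes in arrival order.
    return {topic: "; ".join(notes) for topic, notes in grouped.items()}


traces = [
    ("diet", "vegetarian"),
    ("seat", "window"),
    ("diet", "vegetarian"),
    ("diet", "no nuts"),
]
print(compact(traces))
```

Four scattered traces become two consolidated records, which take less room in both storage and the context window.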

So, in summary…

Agentic AI lives or dies by its memory architecture.

Short-term memory drives reasoning; long-term memory drives continuity; shared memory drives collaboration.

Using system design patterns we already know, architects can build agents that stay relevant, aligned, efficient — and actually useful.