Fairness in AI refers to the principle that AI systems should treat all individuals and groups equitably — without bias, discrimination, or unjust outcomes. In practice, this means designing, training, and deploying models that do not systematically disadvantage people based on sensitive attributes like gender, race, or age.

🤔 How Does Bias Creep In?

Bias can infiltrate an AI system at several stages.

Training Data Bias: If your training data doesn’t reflect the full diversity of the population, your model will likely learn and amplify those imbalances.

Example: Early face detection systems failed to recognize non-Caucasian faces accurately because they were trained on datasets lacking representation.

Algorithmic Bias: Some models might unintentionally weigh protected features (like gender or age) more heavily in decision-making — even if those features are not causally related to the outcome.

Evaluation/Test Data Bias: Even after training, biased evaluation data can skew performance results. A model that performs well on a skewed test set might still be unfair in the real world.

🛠️ What Can You Do About It?

Start with a critical question: Is the observed bias reflective of a real-world, relevant difference — or does it encode historical or systemic unfairness?

For example, if a role demands high upper-body strength, selecting younger individuals might be justifiable. But for a software engineering job using AI tools, rejecting older candidates due to age alone would be clearly unfair.

If the bias is unjustified or harmful, here’s how to mitigate it:

- Augment Training Data: Ensure balanced representation across sensitive attribute groups (e.g., gender, ethnicity, age).

- Try Different Models: Some models handle bias better than others. Comparing across algorithms can reveal which ones generalize more equitably.

- Balance Test Data: Just as training data should be representative, ensure that your validation and hold-out sets are not skewed.

- Run Fairness Tests:

Counterfactual Testing- Flip only the sensitive attribute (e.g., Male ↔ Female) and check if predictions change.

Prompt Stress Tests for GenAI- Inject biased prompts and observe responses.

Demographic Generalization Tests- Evaluate performance on underrepresented or regionally different groups. - Deploy Monitoring Hooks: Post-deployment, track whether sensitive features are implicitly influencing decisions.

- Control Feedback Loops: Guard against user interactions that could bias future fine-tuning or adaptive learning.

- Scrutinize Knowledge Bases: For RAG (Retrieval-Augmented Generation), check that embeddings and sources don’t carry encoded bias.

⚙️ Techniques to Mitigate Bias

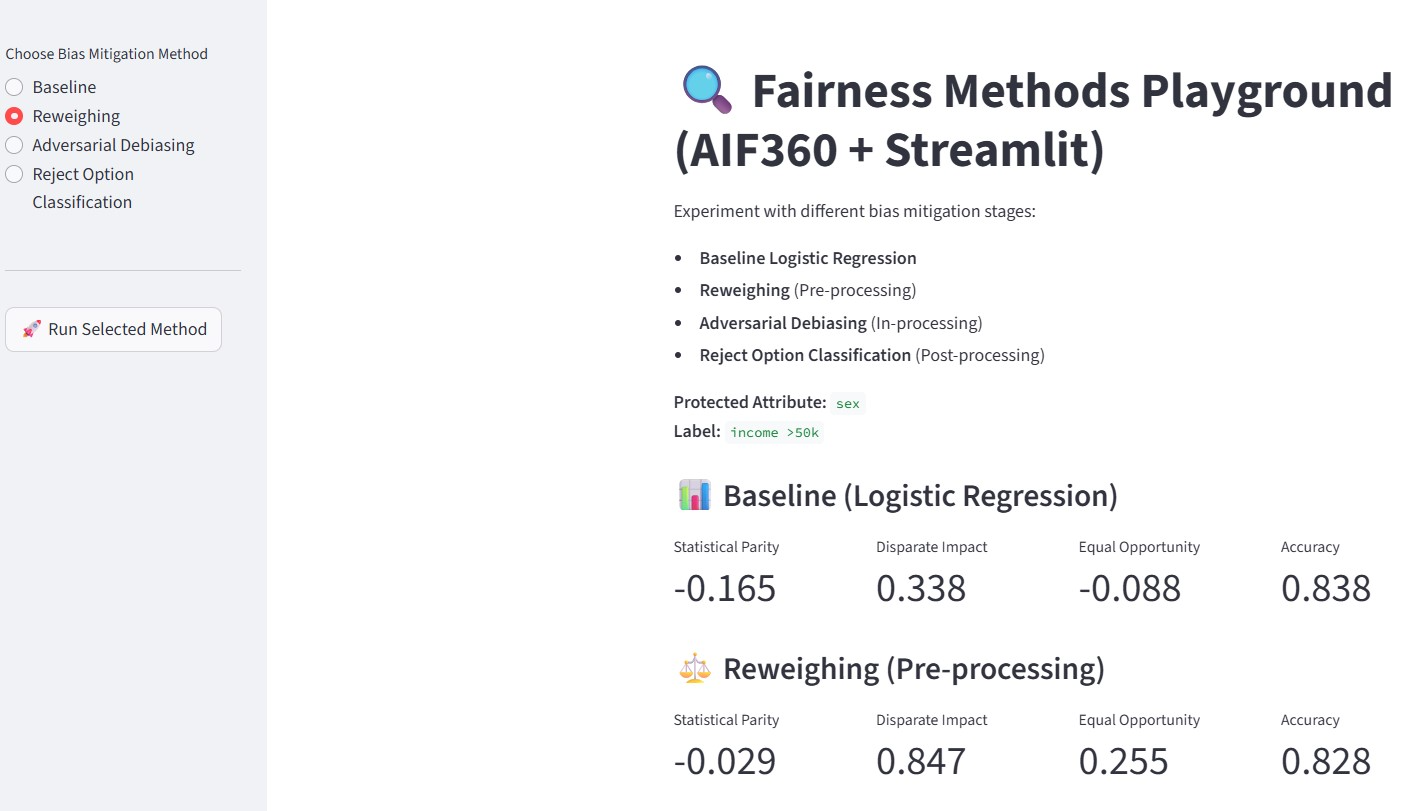

Bias mitigation can occur at three stages of the ML lifecycle.

Pre-processing (e.g., Reweighing): Adjust the training data so that underrepresented groups are given more weight. This helps the model learn a more balanced representation. See how AIF360 does this.

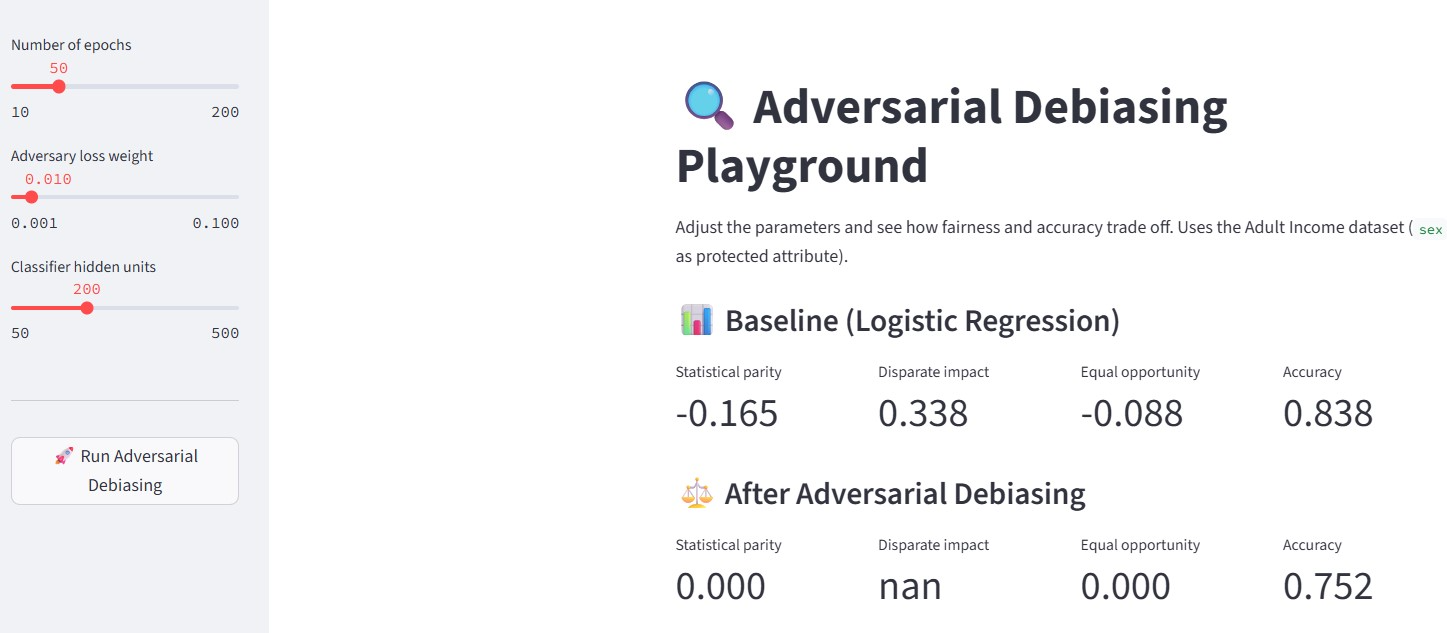

In-processing (e.g., Adversarial Debiasing): Use a dual-model setup — one classifier, and one adversary trying to detect the protected attribute. The classifier is penalized if the adversary succeeds, encouraging it to ignore bias-relevant signals. See this example from clinical domain.

⚠️ In our experiments, this method sometimes over-penalized, leading to trivial outputs (e.g., the model predicted the same class for everyone). Fairness metrics like disparate impact showed NaN or 0.000 — signs that the model collapsed under adversarial pressure.

Post-processing (e.g., Reject Option Classification): After predictions are made, decisions near the confidence boundary are flipped in favor of the underprivileged group. This is pragmatic and useful when retraining is not feasible.

🧪 Use streamlit to do the experiments

Note the above outputs I generated using streamlit.

Streamlit gives AI engineers a tight feedback loop — something critical when experimenting with fairness trade-offs.

With Streamlit:

You can visualize fairness metrics in real time.

You can tweak hyperparameters via sliders.

You can simulate counterfactuals with dropdowns.

And you can immediately see how your model’s accuracy and fairness change.

Instead of the traditional “edit code → rerun → replot → repeat” cycle, you get interactive, real-time control over fairness experiments — ideal during the development phase.

✅ Closing Thoughts

Fairness in AI isn’t just about metrics — it’s about intention, design, and impact. Tools like reweighing and reject option help. Streamlit enables fast iteration. But ultimately, it’s your job to understand the data, question model behavior, and ensure ethical AI outcomes — especially when the stakes involve people.