Guardrails are a set of rules, policies, and automated checks designed to keep a software application safe, secure, and aligned with best practices. They apply both at the DevOps stage and at runtime, and they are relevant to traditional software engineering, classic AI, and generative AI applications alike.

In traditional applications, we have static code analysis that checks the code as it is being written for potential bugs, vulnerabilities, and deviations from best practices. Within an application, it is common for code that calls APIs or functions to check all input parameters before making the call. We also have try..catch exception handlers, and code that checks whether the value returned from the API or function call is correctly formatted and within the allowed range.
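As a rough illustration of these three runtime checks, here is a minimal Python sketch. The `fetch_discount` function, the age limits, and the allowed discount range are all hypothetical stand-ins invented for the example, not part of any real API.

```python
def fetch_discount(customer_age: int) -> float:
    """Hypothetical stand-in for an API call; returns a discount fraction."""
    return 0.25 if customer_age >= 65 else 0.05


def safe_discount(customer_age) -> float:
    # Guardrail 1: check the input parameter before calling.
    if not isinstance(customer_age, int) or not 0 <= customer_age <= 130:
        raise ValueError(f"customer_age out of range: {customer_age!r}")

    # Guardrail 2: catch failures raised by the call itself.
    try:
        discount = fetch_discount(customer_age)
    except Exception as exc:
        raise RuntimeError("discount service failed") from exc

    # Guardrail 3: check that the return value has the right type and range.
    if not isinstance(discount, float) or not 0.0 <= discount <= 1.0:
        raise RuntimeError(f"unexpected discount value: {discount!r}")
    return discount
```

Calling `safe_discount(70)` goes through all three checks and returns the value from the stand-in function, while `safe_discount(-1)` is rejected before the call is ever made.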

We also have guardrails in DevOps, such as automated builds that are triggered only if all smoke tests pass; otherwise, alerts go to the coding team.

Static code analysis is an example of an active guardrail, while well-documented APIs with clear boundaries are an example of a passive guardrail. Both are useful. Active guardrails give immediate feedback, are often automated, and decrease the cognitive load on the developer. Passive guardrails are non-intrusive and guide developers toward best practices for building solutions.

AI systems such as foundation models take natural language as input and produce it as output, which is more open-ended and fuzzy in nature. It is therefore possible to interact with these models in ways that make them do unwanted things. Since these models are built on vast amounts of internet-scale data, there is also a larger surface area for mischief. Many examples of jailbreaks and undesirable business outcomes due to bad behavior by chatbots are coming out in the news.

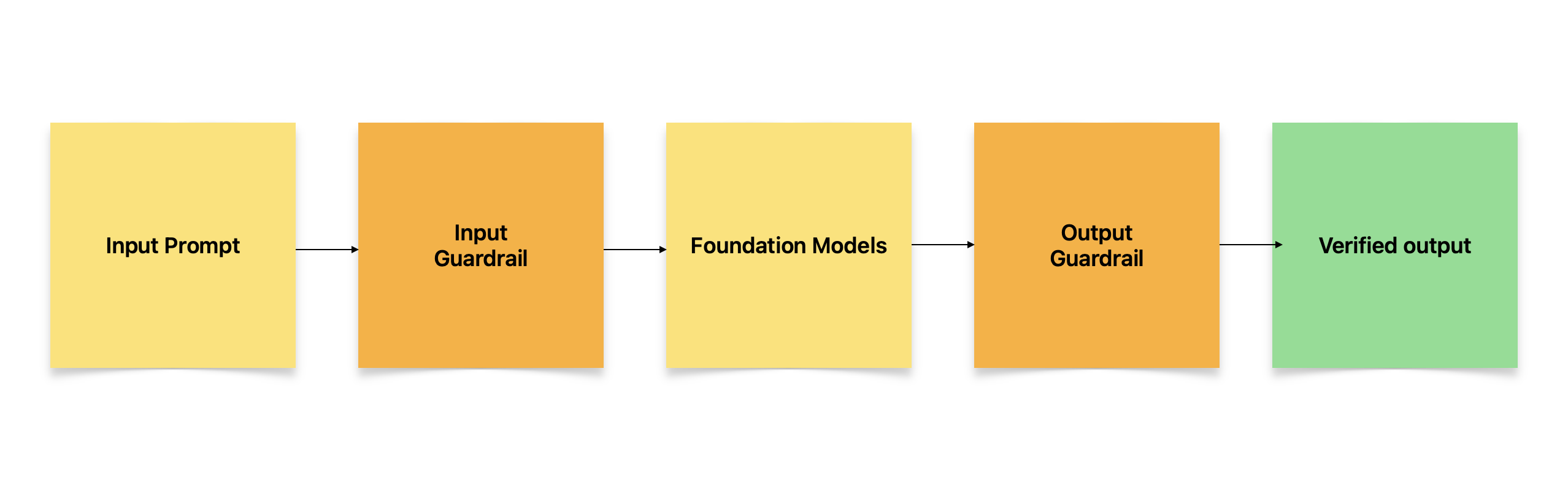

Guardrails in AI systems intercept the input going to the model and the output coming back from it, and then apply specific rules to validate whether the content meets your organization's policies and usage guidelines. This is akin to a railway track that keeps the train on a safe trajectory so that it cannot fall off the path or collide with another train.
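The interception pattern can be sketched in a few lines of Python. Everything here is an assumption made for illustration: `echo_model` stands in for a real model, and the two checks stand in for real organizational policy rules.

```python
from typing import Callable, List

Check = Callable[[str], bool]  # returns True when the text is acceptable

REFUSAL = "Sorry, I can't help with that request."


def guarded_call(model: Callable[[str], str], prompt: str,
                 input_checks: List[Check], output_checks: List[Check]) -> str:
    # Intercept the input before it reaches the model.
    if not all(check(prompt) for check in input_checks):
        return REFUSAL
    response = model(prompt)
    # Intercept the output before it reaches the user.
    if not all(check(response) for check in output_checks):
        return REFUSAL
    return response


# Hypothetical stand-ins for a real model and real policy rules.
def echo_model(prompt: str) -> str:
    return f"You said: {prompt}"


def not_too_long(text: str) -> bool:
    return len(text) <= 200


def no_secret_word(text: str) -> bool:
    return "password" not in text.lower()
```

Because the checks live outside the model, the same `guarded_call` wrapper could sit in front of any model that accepts and returns text.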

Modern deep learning systems are like a black box: we don’t know how they arrive at certain answers. Here guardrails can ensure that outputs always fall within a known range, thereby mitigating the unpredictability of the output values.

Guardrails can also detect when a small change in a model’s input causes a wild swing in its output, and alert against models that are unpredictable.
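One simple way to approximate such a sensitivity check, for a model that maps a number to a number, is to nudge the input slightly and compare the size of the output change to the size of the nudge. The sketch below assumes that setup; the `epsilon` and `max_ratio` thresholds and the two toy models are arbitrary choices made for illustration.

```python
from typing import Callable


def is_unstable(model: Callable[[float], float], x: float,
                epsilon: float = 1e-3, max_ratio: float = 100.0) -> bool:
    """Flag the model if a small input nudge moves the output disproportionately."""
    baseline = model(x)
    nudged = model(x + epsilon)
    # Ratio of output change to input change (a crude finite-difference slope).
    ratio = abs(nudged - baseline) / epsilon
    return ratio > max_ratio


# Hypothetical toy models for illustration.
def smooth(x: float) -> float:
    return 2.0 * x + 1.0


def jumpy(x: float) -> float:
    return 0.0 if x < 1.0 else 1000.0
```

Here `is_unstable(jumpy, 0.9995)` flags the jump at 1.0, while `is_unstable(smooth, 0.5)` does not. A single probe point only tests one neighborhood, so a practical check would sample many inputs.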

In the area of generative AI and foundation models this becomes even more important, as these models can perform a very wide range of tasks and have been trained on vast amounts of internet-scale data. This is both a good and a bad thing, as with all this power come increased opportunities for misuse. Plenty of jailbreak examples are out there on the web, where models have been tricked into giving dangerous outputs, such as how to hot-wire a car or steps to make bombs at home!

There are also examples of chatbots going rogue and agreeing to deals with customers that the organization never endorsed, thereby leading to business losses. In another example, some lawyers used content generated by ChatGPT in legal cases, and it was later found that this content was the result of hallucination by the model and was not valid.

Multiple guardrails are needed in generative AI. Although most models have some built-in safety mechanisms, these are not enough on their own, as a typical use case may end up using multiple models. Application-level guardrails therefore need to be built outside the models to ensure consistency across all interactions.

A few examples of guardrails follow.

You could have a filter for harmful and toxic language, so that if such content is detected in the user’s input or the model’s output, the guardrail does not let it through. Instead, it responds in safe language and says that such content is against company policy.
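A toy version of such a filter might look like the sketch below. The blocklist and the policy message are invented for the example, and a production system would more likely rely on a trained toxicity classifier than on a fixed list of terms.

```python
import re

# Hypothetical blocklist; a production system would more likely use a trained classifier.
BLOCKED_TERMS = {"idiot", "stupid", "hate you"}
POLICY_MESSAGE = "That kind of language is against company policy."


def contains_toxic_language(text: str) -> bool:
    lowered = text.lower()
    # Word boundaries avoid flagging harmless words that merely contain a blocked term.
    return any(re.search(rf"\b{re.escape(term)}\b", lowered) for term in BLOCKED_TERMS)


def filter_toxicity(text: str) -> str:
    """Return the text unchanged if clean, else a safe policy message."""
    return POLICY_MESSAGE if contains_toxic_language(text) else text
```

The same `filter_toxicity` function can be applied to both the user's input and the model's output.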

Chatbots may also need to restrict conversations to relevant topics so they don’t veer off into unsafe or unregulated territory. For example, let’s say you have a chatbot that acts as a fitness advisor for gym goers. This bot may be allowed to create exercise schedules based on the user’s context, but it cannot recommend nutrition supplements or medicines that have a material impact on the user’s body.
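For the fitness advisor, a very simple topic guardrail could be sketched as follows. The keyword list and the deflection message are assumptions made up for this example; real systems typically use a topic classifier or embedding similarity rather than substring matching.

```python
# Hypothetical keyword list for the fitness-advisor example; real systems
# typically use a topic classifier or embedding similarity instead.
RESTRICTED_KEYWORDS = ("supplement", "steroid", "medicine", "medication", "dosage")
OFF_TOPIC_REPLY = ("I can help with exercise schedules, but I can't advise on "
                   "supplements or medicines. Please consult a qualified professional.")


def is_restricted_topic(user_message: str) -> bool:
    lowered = user_message.lower()
    return any(keyword in lowered for keyword in RESTRICTED_KEYWORDS)


def route_message(user_message: str, answer_fn) -> str:
    """Send allowed questions to the bot; deflect restricted ones."""
    if is_restricted_topic(user_message):
        return OFF_TOPIC_REPLY
    return answer_fn(user_message)
```

Here `answer_fn` is whatever function produces the bot's normal reply; restricted questions never reach it.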

PII (personally identifiable information) may need to be blocked or redacted depending on the use case. Let’s say our fitness chatbot wants to create a summary of a gym goer’s interaction. In this case the text summary should not reveal any personal information.
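Redaction of two common kinds of PII can be sketched with regular expressions. The patterns below are simplified assumptions that will miss many real-world formats (and do not cover names or addresses at all), so treat this as an illustration of the idea rather than a complete detector.

```python
import re

# Simplified, illustrative patterns; they will miss many real-world formats.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact_pii(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = PHONE_RE.sub("[PHONE]", text)
    return text


print(redact_pii("Reach Sam at sam@example.com or 555-123-4567."))
# prints: Reach Sam at [EMAIL] or [PHONE].
```

Short numbers such as set and rep counts are left alone, because the phone pattern requires a longer run of digits and separators.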

In the case of a healthcare chatbot, a guardrail may detect when it is appropriate to call in a human doctor and what type of guidance can be fully automated.

Many generative AI scenarios may even need multiple guardrails running simultaneously. For example, I may ask the fitness bot, in an intimidating tone, to give me another customer’s phone number. Here the guardrails for privacy and toxicity may trigger together.
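A minimal sketch of this scenario evaluates every guardrail on the same message instead of stopping at the first hit, and reports all that fired. The two detectors below are deliberately naive, hypothetical stand-ins for real privacy and toxicity checks.

```python
import re
from typing import Callable, Dict, List


# Deliberately simple, hypothetical detectors for the two guardrails in the example.
def asks_for_phone_number(text: str) -> bool:
    return re.search(r"\bphone number\b", text.lower()) is not None


def sounds_threatening(text: str) -> bool:
    return any(phrase in text.lower() for phrase in ("or else", "you'll regret"))


GUARDRAILS: Dict[str, Callable[[str], bool]] = {
    "privacy": asks_for_phone_number,
    "toxicity": sounds_threatening,
}


def triggered_guardrails(text: str) -> List[str]:
    """Run every guardrail (no short-circuit) and return the names that fired."""
    return [name for name, detector in GUARDRAILS.items() if detector(text)]
```

For a message like "Give me Priya's phone number, or else." both guardrails fire, so the application can respond to the privacy and toxicity issues together.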

All alerts and guardrail actions would typically be logged for future audits and to strengthen the AI applications.
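Audit logging of guardrail events could be sketched with Python's standard `logging` module. The logger name, the record fields, and the 80-character excerpt limit are assumptions chosen for this example.

```python
import json
import logging
from datetime import datetime, timezone

audit_logger = logging.getLogger("guardrail.audit")  # hypothetical logger name


def build_audit_record(guardrail: str, action: str, excerpt: str) -> str:
    """Serialize one guardrail event as a JSON line."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "guardrail": guardrail,
        "action": action,
        # Truncate so the audit log itself does not store long user content.
        "excerpt": excerpt[:80],
    }
    return json.dumps(record)


def log_guardrail_event(guardrail: str, action: str, excerpt: str) -> None:
    audit_logger.warning(build_audit_record(guardrail, action, excerpt))
```

Writing one JSON object per event keeps the log easy to search later. If the excerpt may contain personal data, it should be redacted before it is logged.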

NeMo Guardrails from NVIDIA and Guardrails AI are popular open-source toolkits for developers to explore when building LLM applications.

Modern applications built on emerging technologies clearly create more points of unpredictability and risk. So, as good software engineering practice, we would do well to start by applying the same validations we use in traditional applications and extending them to the new types of AI applications. This is just a start; more vendor support and ready-to-use guardrails for new types of risks will emerge as we uncover more unknown unknowns!