In my previous post I shared details of setting up local GPT models and using them for applications like Q&A systems.

Here I take the same local GPT application and enable detailed observability of its execution using a tool called LangSmith, for which I was granted early access by its creators.

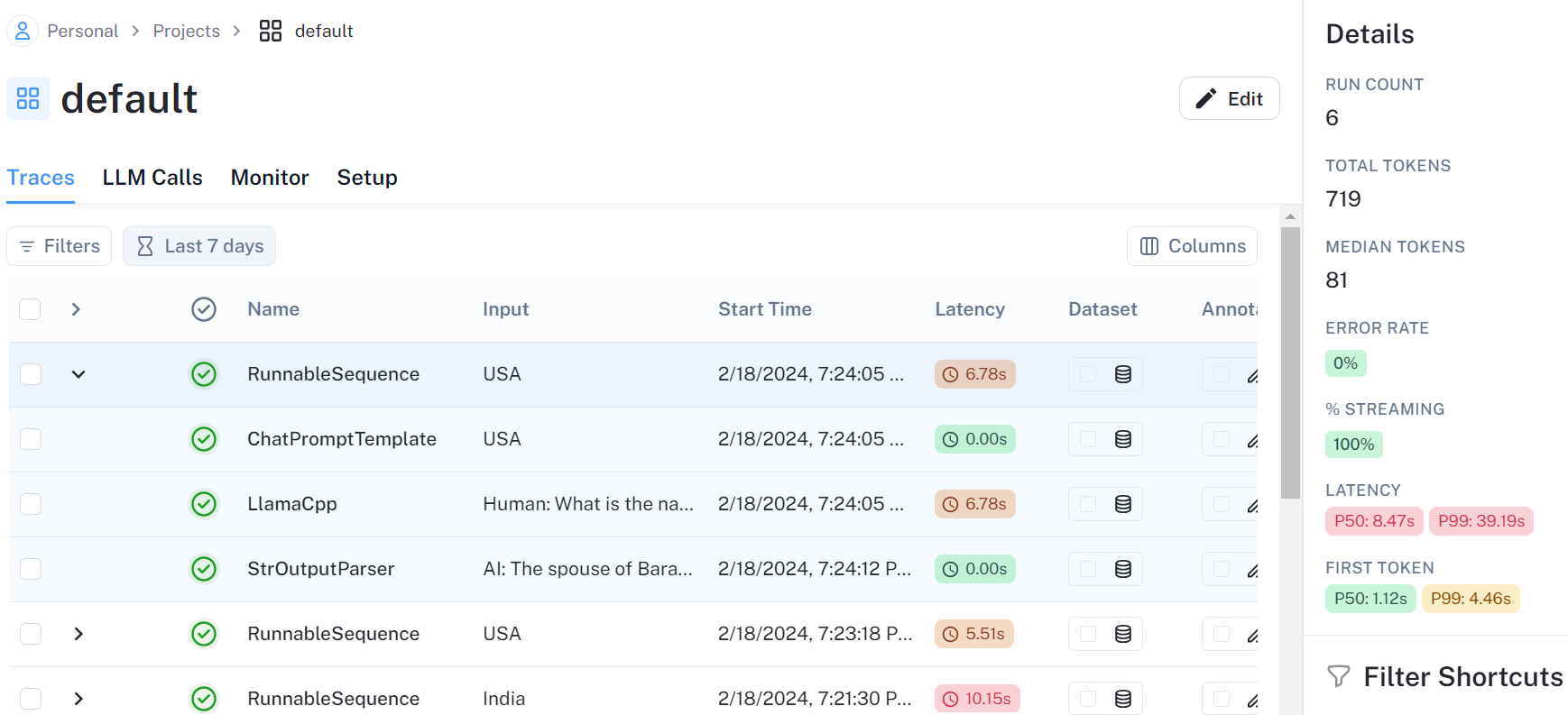

LangSmith, part of the LangChain ecosystem, is an observability tool for managing and monitoring the LLM (Large Language Model) calls that originate from your applications, as well as the responses they return.

To activate tracing with LangChain, all you need to do is set the following environment variables.

export LANGCHAIN_TRACING_V2=true

export LANGCHAIN_API_KEY=<your-api-key>
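
The same two variables can also be set from Python, which is handy in notebooks where editing the shell environment is awkward. This is a minimal sketch: the helper name `enable_langsmith_tracing` is my own, and the optional `LANGCHAIN_PROJECT` variable (used to group runs under a project name) is not part of the setup above. Call it before any chains are built.

```python
import os
from typing import Optional


def enable_langsmith_tracing(api_key: str, project: Optional[str] = None) -> None:
    """Set the environment variables that switch LangChain tracing on.

    Hypothetical helper for illustration; it only writes to os.environ.
    """
    os.environ["LANGCHAIN_TRACING_V2"] = "true"
    os.environ["LANGCHAIN_API_KEY"] = api_key
    if project:
        # Optional (assumption, not in the shell setup above):
        # groups runs under a named project.
        os.environ["LANGCHAIN_PROJECT"] = project


# Usage: replace the placeholder with your own key.
# enable_langsmith_tracing("<your-api-key>")
```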

LangChain applications log data to the LangSmith cloud, where developers can easily view it in a web UI.

Run count, latency, input tokens and output tokens are traced and displayed for every run.

As soon as you run the application, its traces are logged to both the console and the web server. This real-time feedback loop can be immensely beneficial in understanding the application’s interactions with the LLM.

In my experimentation, I used the small Microsoft Phi-2 model locally. Moving to a proprietary model like GPT-4, however, would incur a cost for each query. This is where LangSmith observability can be very useful for DevOps. With LangSmith, the dev team can closely monitor how the token count of the retrieved context grows for each query, and so pinpoint the queries or prompts that contribute most to rising costs.
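
To make the cost argument concrete, here is a rough sketch of how per-run token counts could be turned into a ranking of the most expensive queries. Everything in it is an assumption for illustration: `RunRecord` is a hypothetical container for numbers copied out of the trace view, not a LangSmith type, and the per-1,000-token prices are placeholders rather than real pricing.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class RunRecord:
    """Hypothetical per-run metrics, e.g. copied from the trace view."""
    query: str
    input_tokens: int
    output_tokens: int


def estimate_cost(run: RunRecord, input_price_per_1k: float,
                  output_price_per_1k: float) -> float:
    """Estimate the cost of one run from its token counts."""
    return (run.input_tokens / 1000) * input_price_per_1k \
        + (run.output_tokens / 1000) * output_price_per_1k


def top_cost_drivers(runs: List[RunRecord], input_price_per_1k: float,
                     output_price_per_1k: float, n: int = 3) -> List[RunRecord]:
    """Return the n runs with the highest estimated cost, most expensive first."""
    return sorted(
        runs,
        key=lambda r: estimate_cost(r, input_price_per_1k, output_price_per_1k),
        reverse=True,
    )[:n]


# Made-up sample data; prices below are placeholders, not real rates.
runs = [
    RunRecord("What is the refund policy?", 1200, 150),
    RunRecord("Hello", 300, 80),
    RunRecord("Summarize the whole handbook", 4000, 200),
]
for run in top_cost_drivers(runs, input_price_per_1k=0.03,
                            output_price_per_1k=0.06, n=2):
    print(run.query, round(estimate_cost(run, 0.03, 0.06), 4))
```

Sorting by estimated cost rather than raw token count matters when input and output tokens are priced differently.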

The other area where this can be useful is testing. A slight modification to a prompt or its input context can significantly change token usage, so regression-testing charts can be generated from LangSmith output and the entire dev team can be notified on a Slack channel to take action.
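
A token-usage regression check along these lines could look roughly like the sketch below. It assumes the token counts per test case have already been collected into plain dictionaries (for example, from two test runs); the function names, the 10% tolerance, and the message format are all hypothetical, and actually posting the message to Slack is left out.

```python
from typing import Dict


def token_regressions(baseline: Dict[str, int], candidate: Dict[str, int],
                      tolerance: float = 0.10) -> Dict[str, float]:
    """Return test cases whose token count grew by more than `tolerance`.

    Keys are test-case names; values are fractional growth (0.3 means +30%).
    """
    regressions = {}
    for name, base_tokens in baseline.items():
        new_tokens = candidate.get(name)
        if new_tokens is None or base_tokens <= 0:
            continue  # nothing to compare against
        growth = (new_tokens - base_tokens) / base_tokens
        if growth > tolerance:
            regressions[name] = growth
    return regressions


def format_alert(regressions: Dict[str, float]) -> str:
    """Build a plain-text message suitable for a chat channel."""
    lines = [f"{name}: +{growth:.0%} tokens"
             for name, growth in sorted(regressions.items())]
    return "Token usage regression detected:\n" + "\n".join(lines)


# Made-up numbers: token totals before and after a prompt change.
baseline = {"faq": 1000, "summary": 2000}
candidate = {"faq": 1300, "summary": 2050}

found = token_regressions(baseline, candidate)
if found:
    print(format_alert(found))
```

Comparing relative growth instead of absolute token counts keeps one threshold meaningful across short and long prompts.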

LangSmith integrates cleanly with DevOps workflows, offers real-time monitoring, and makes it easy to filter runs by attributes such as token count or latency, giving developers what they need to optimize their LLM-driven applications.

LangSmith can be a vital tool for developers looking to harness the full potential of these powerful models while keeping the associated costs and latencies in check. With LangSmith by their side, developers can navigate the LLM landscape with confidence, knowing they have the tools and insights needed to optimize their applications for success.