Generative AI applications such as large language models (LLMs) differ from traditional AI in their scale, their complexity, and the non-deterministic nature of their outputs. A traditional AI model doing something like classifying “Cats versus Dogs” or sentiment analysis is relatively deterministic in its accuracy. The new breed of LLM applications, however, may give different results for the same input, whether to one user or to different users, because of the complexity of the neural network, the vast amount of training data, and the temperature setting used when generating outputs.

While this non-determinism can bring serendipity to the user experience, it can also cause frustration and confusion.

Human-centric design thinking for the UX therefore becomes very important. Most teams get excited about which model to use, what data can be leveraged for vector embeddings and retrieval, or how prompt engineering can be applied in different ways.

While these are important, it is better to start with the end user in mind and with how they will interact with the application. The UX must be transparent about what the Generative AI can and cannot do, and it must give users control over how they integrate it into their workflows.

The UX cannot expect the user to be a prompt engineer. It is therefore an anti-pattern to offer just a text box where the user types any natural-language text, which is then sent to an LLM for a response.

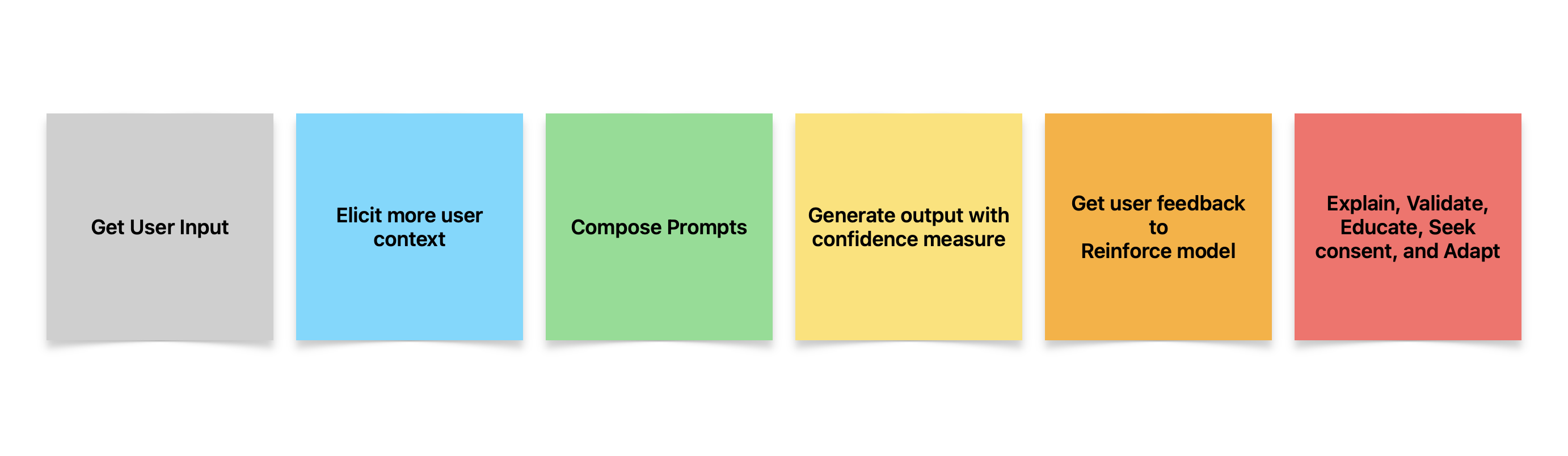

Instead, the UX should provide intermediate steps between the user input and the model output. This could be as simple as breaking down the user’s input into its constituent parts and clarifying the user’s intent in more detail.

For example, if I tell the LLM “Generate code for doing bubble sort”, the UX could clarify the data type and length of the input, the programming language to use, the coding style (procedural or object-oriented), the intention behind the use case, and so on. The user should be able to click and select these context elements, and behind the scenes the system should automatically assemble a well-formed prompt and fetch the answer.
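The bubble sort example can be sketched in code. This is a minimal illustration, assuming the UI collects the user's selections into a simple record. The class `SortRequestContext`, its field names, and the function `build_prompt` are hypothetical names chosen for this sketch, not part of any library.

```python
from dataclasses import dataclass


@dataclass
class SortRequestContext:
    """Context elements the user selects in the UI (hypothetical fields)."""
    language: str       # e.g. "Python"
    data_type: str      # e.g. "integers"
    input_length: str   # e.g. "up to 1,000 items"
    style: str          # "procedural" or "object-oriented"
    purpose: str        # e.g. "teaching example"


def build_prompt(task: str, ctx: SortRequestContext) -> str:
    """Assemble a structured prompt from the task and the selected context."""
    lines = [
        f"Task: {task}",
        f"Programming language: {ctx.language}",
        f"Input data type: {ctx.data_type}",
        f"Expected input length: {ctx.input_length}",
        f"Coding style: {ctx.style}",
        f"Purpose: {ctx.purpose}",
        "Return only the code, with brief comments.",
    ]
    return "\n".join(lines)


ctx = SortRequestContext(
    language="Python",
    data_type="integers",
    input_length="up to 1,000 items",
    style="procedural",
    purpose="teaching example",
)
prompt = build_prompt("Generate code for doing bubble sort", ctx)
print(prompt)
```

The user only clicks through a handful of choices, and the prompt text itself stays out of sight. The resulting string would then be sent to whichever model the application uses.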

As with inputs, outputs can also be progressively disclosed. In the sorting example above, the system could generate the code one line at a time rather than as an entire function block. This gives the user an opportunity to validate smaller pieces of output and avoids overwhelming them with a large block of code. It is also useful because generated output such as code often fails to compile or to function correctly. When the output is disclosed progressively, the user can validate it step by step and intervene sooner, instead of being confused by a larger set of code outputs.
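One way to picture progressive disclosure is a loop that reveals one chunk of generated output at a time and stops as soon as the user rejects a chunk. The sketch below assumes the generated code is already available as a list of lines and that the UI supplies an approval callback. The names `disclose_progressively` and `approve` are hypothetical.

```python
from typing import Callable, Iterable, List


def disclose_progressively(
    chunks: Iterable[str],
    approve: Callable[[str], bool],
) -> List[str]:
    """Show chunks one at a time; stop at the first chunk the user rejects."""
    accepted: List[str] = []
    for chunk in chunks:
        if not approve(chunk):
            # The user intervened: keep what was validated so far.
            break
        accepted.append(chunk)
    return accepted


generated_lines = [
    "def bubble_sort(items):",
    "    n = len(items)",
    "    for i in range(n):",
    "        for j in range(n - i):  # off-by-one: should be n - i - 1",
    "            if items[j] > items[j + 1]:",
]


# Stand-in for a real UI prompt: reject any line flagged as suspicious.
def approve(line: str) -> bool:
    return "off-by-one" not in line


accepted = disclose_progressively(generated_lines, approve)
print("\n".join(accepted))
```

In a real interface the callback would wait for a click or keypress, and a rejection could trigger regeneration of just that chunk instead of the whole function.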

Writing code is smooth with LLMs, but reading the generated code can be challenging for a human, because it is similar to taking over someone else’s code. Software engineers who maintain others’ code know this frustration well. Thoughtful UX design can help alleviate it.

It is also good UX practice to show confidence levels visually. Numbers and charts can illustrate how the confidence level changes over time or with different inputs. The UX should also alert users when the confidence level drops below a threshold appropriate to the task, so that they know when a result is not reliable. Additionally, the UX can let the user click on a confidence value to get tips on how to improve the model's certainty.
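A task-dependent confidence alert can be sketched as follows. This assumes the application already has a confidence score between 0 and 1 for each result; how that score is obtained is outside the scope of this sketch. The task names and threshold values are illustrative assumptions only.

```python
from typing import Optional

# Hypothetical per-task thresholds: stricter where errors are more costly.
TASK_THRESHOLDS = {
    "code_generation": 0.8,
    "summarization": 0.6,
    "brainstorming": 0.4,
}
DEFAULT_THRESHOLD = 0.6


def confidence_alert(task: str, confidence: float) -> Optional[str]:
    """Return a warning message if confidence is below the task's threshold."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    threshold = TASK_THRESHOLDS.get(task, DEFAULT_THRESHOLD)
    if confidence < threshold:
        return (
            f"Low confidence for {task}: {confidence:.2f} is below "
            f"the threshold of {threshold:.2f}. Consider adding more context."
        )
    return None


print(confidence_alert("code_generation", 0.55))
print(confidence_alert("brainstorming", 0.55))
```

The same score triggers a warning for code generation but not for brainstorming, which matches the idea that the threshold should depend on the task.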

Educational pop-ups or tooltips can also give users bite-sized lessons on what Generative AI is all about, in simple terms that a non-technical person can understand.

The UX should also clearly communicate how user data is stored, processed, and used. Users may also be given the ability to adjust their privacy settings, similar to how websites ask whether user data can be used for analytics or for improving performance.

The model's temperature setting can also be varied automatically to produce more serendipitous responses, and the effect on user sentiment can then be measured.

Simple thumbs up/down buttons and star ratings for outputs in the UI can enable reinforcement learning of the model from human feedback, which can improve the user experience in the next iteration of model retraining.
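Capturing this kind of feedback does not need much machinery. The sketch below assumes an in-memory log and hypothetical names (`Feedback`, `FeedbackLog`). A real system would persist the records and pass them to whatever retraining pipeline the team uses.

```python
from dataclasses import dataclass, field
from statistics import mean
from typing import List, Optional


@dataclass
class Feedback:
    """One user rating attached to a prompt/response pair."""
    prompt: str
    response: str
    thumbs_up: Optional[bool] = None   # None if the user did not vote
    stars: Optional[int] = None        # 1 to 5, or None if not rated


@dataclass
class FeedbackLog:
    entries: List[Feedback] = field(default_factory=list)

    def add(self, fb: Feedback) -> None:
        if fb.stars is not None and not 1 <= fb.stars <= 5:
            raise ValueError("stars must be between 1 and 5")
        self.entries.append(fb)

    def summary(self) -> dict:
        """Aggregate ratings, ignoring entries where a signal is missing."""
        thumbs = [f.thumbs_up for f in self.entries if f.thumbs_up is not None]
        stars = [f.stars for f in self.entries if f.stars is not None]
        return {
            "count": len(self.entries),
            "thumbs_up_rate": sum(thumbs) / len(thumbs) if thumbs else None,
            "average_stars": mean(stars) if stars else None,
        }


log = FeedbackLog()
log.add(Feedback("sort a list", "def bubble_sort(...)", thumbs_up=True, stars=5))
log.add(Feedback("sort a list", "def quick_sort(...)", thumbs_up=False))
summary = log.summary()
print(summary)
```

Storing the prompt and response alongside each rating is what makes the records usable later as training signal, not only as a dashboard metric.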

Users can also be allowed to play with novel aspects of the underlying LLM, such as having it adopt a personality: professional, friendly, or informative.
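A personality selector can be as simple as mapping each choice to an instruction that is placed ahead of the user's text. The sketch below assumes a chat-style message list with `role` and `content` keys, a format many chat APIs accept. The instruction wording and the function name `build_messages` are illustrative assumptions.

```python
from typing import Dict, List

# Hypothetical instruction text for each selectable personality.
PERSONA_INSTRUCTIONS: Dict[str, str] = {
    "professional": "Respond in a concise, formal, business-like tone.",
    "friendly": "Respond in a warm, casual, encouraging tone.",
    "informative": "Respond with thorough explanations and relevant detail.",
}


def build_messages(persona: str, user_text: str) -> List[Dict[str, str]]:
    """Prefix the user's text with the instruction for the chosen persona."""
    if persona not in PERSONA_INSTRUCTIONS:
        raise ValueError(f"unknown persona: {persona!r}")
    return [
        {"role": "system", "content": PERSONA_INSTRUCTIONS[persona]},
        {"role": "user", "content": user_text},
    ]


messages = build_messages("friendly", "Explain bubble sort.")
print(messages)
```

The user picks a label from a menu and never sees or edits the instruction text.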

A UX focused on a specific niche domain may also work better than a generic UX that tries to handle multiple tasks.

When the underlying models are large and complex, latency can also become a concern. This is important to evaluate, because high latency can cause users to lose interest and stop using the product. Smaller, more focused models are gaining popularity, as they can be more tractable for ensuring accuracy and explainability, and thus for delivering more responsible Generative AI with an enjoyable user experience.

In conclusion, UX design for LLM applications is as important as data preprocessing, model selection, and prompt engineering. Good UX keeps users enjoying the experience, and that translates directly into product success.