What is MCP?

Model Context Protocol (MCP) is an open standard from Anthropic for connecting AI assistants to the systems where data resides, including content repositories, business tools, and development environments.

Imagine you have “N” applications (chatbots, coding assistants, or data analysis tools) and “M” different external tools or data sources (databases, file systems, services such as GitHub, and so on). If each of the “N” applications must use each of the “M” tools, you need “N x M” integrations in total. This is a developer’s nightmare.

With MCP, you create “N” MCP clients (one for each application) and “M” MCP servers (one for each external source or tool). Each server adheres to a standard protocol and exposes its capabilities to any compliant MCP client. The total number of implementations drops to “N + M”, because the protocol provides USB-style plug and play between applications and tools. This approach is designed to replace fragmented, one-off integrations.
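The arithmetic above can be checked with a few lines of Python. This is only an illustration; the function names and the sample counts are made up for the example.

```python
def point_to_point_integrations(n_apps: int, m_tools: int) -> int:
    """Every application needs its own connector to every tool."""
    return n_apps * m_tools


def mcp_implementations(n_apps: int, m_tools: int) -> int:
    """One MCP client per application plus one MCP server per tool."""
    return n_apps + m_tools


# Illustrative numbers only: 5 applications and 8 tools.
print(point_to_point_integrations(5, 8))  # 40
print(mcp_implementations(5, 8))          # 13
```

The gap widens as either side grows, because one count is a product and the other is a sum.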

Architecture of MCP

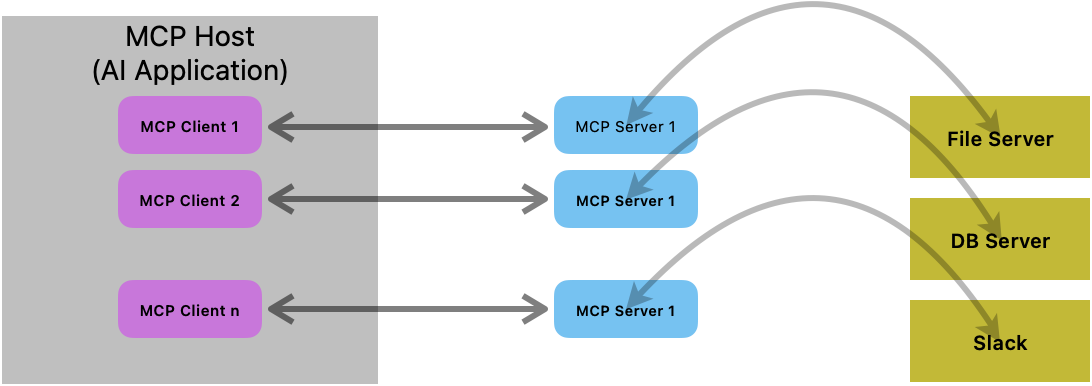

The architecture is centered around the interaction between an MCP host, MCP clients, and MCP servers.

MCP Host: This is the AI application that the user directly interacts with (e.g., a chatbot, a coding assistant).

MCP Client: A component within the host that handles the communication protocol. Each MCP client can communicate with one MCP server at a time.

MCP Server: A service that acts as an intermediary, translating MCP protocol requests into commands for a specific external resource (e.g., a database, a file system, a Slack server).

The MCP server supplies contextual data to the MCP client. Each MCP client maintains a direct connection to exactly one MCP server, but an MCP host (the AI application) can run multiple MCP clients, allowing it to connect to multiple different servers and resources as needed.
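The relationship between host, clients, and servers can be sketched as a small data model. This is a conceptual illustration, not code from any MCP SDK; the class names and the server names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Server:
    name: str  # illustrative label, e.g. "filesystem" or "database"


@dataclass
class Client:
    server: Server  # each client is bound to exactly one server


@dataclass
class Host:
    name: str
    clients: list[Client] = field(default_factory=list)

    def connect(self, server: Server) -> Client:
        """Create a dedicated client for the given server."""
        client = Client(server=server)
        self.clients.append(client)
        return client

    def connected_servers(self) -> list[str]:
        return [client.server.name for client in self.clients]


host = Host(name="coding-assistant")
host.connect(Server("filesystem"))
host.connect(Server("database"))
print(host.connected_servers())  # ['filesystem', 'database']
```

The host reaches two servers, yet no single client ever talks to more than one of them.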

The protocol uses JSON-RPC 2.0 for the data layer, which defines the message format. The transport layer handles how messages are delivered: stdio for local processes or Streamable HTTP for remote connections. The protocol is stateful: a lifecycle management process covers initialization, capability negotiation, and protocol version agreement.
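As a rough sketch of the lifecycle, the snippet below builds the JSON-RPC messages a client sends during initialization and frames them for a stdio transport, where each message occupies one line. The helper names, the client name, and the version string are assumptions for illustration; consult the specification for the current protocol revisions.

```python
import json

# Example value only; the set of valid revisions is defined by the specification.
SUPPORTED_VERSIONS = {"2025-06-18"}


def initialize_request(request_id: int, version: str) -> dict:
    """First message of the lifecycle: the client proposes a version and its capabilities."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "initialize",
        "params": {
            "protocolVersion": version,
            "capabilities": {},  # the client would declare its primitives here
            "clientInfo": {"name": "example-client", "version": "0.1.0"},
        },
    }


def server_version_accepted(response: dict) -> bool:
    """The client continues only if it also supports the version the server answered with."""
    return response["result"]["protocolVersion"] in SUPPORTED_VERSIONS


def initialized_notification() -> dict:
    """Notifications carry no id because no response is expected."""
    return {"jsonrpc": "2.0", "method": "notifications/initialized"}


def frame_for_stdio(message: dict) -> str:
    """Serialize one message as a single newline-terminated line."""
    return json.dumps(message) + "\n"


request = initialize_request(1, "2025-06-18")
print(frame_for_stdio(request), end="")
```

A Streamable HTTP transport would carry the same JSON bodies; only the delivery mechanism changes.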

Primitives of MCP

MCP primitives are a key idea here: they define what clients and servers can offer each other. Servers can expose tools, resources (data), and reusable prompt templates. Each primitive has methods for discovery, retrieval, and execution by the client. Likewise, an MCP client can expose capabilities to a server.

These primitives matter because they dictate how sharing happens between servers and clients, what type of information can be shared, and what range of actions can be performed. Some Responsible AI controls, such as limits on agency, can be enforced at this level.

Server primitives may require user consent before execution, so users stay in control. The model decides when to invoke tools, subject to several mechanisms of human oversight:

a. A UX display that asks users which tools should be configured.

b. Approval dialogs that ask the user to approve the tools for a given workflow.

c. Prompts, model selection, token limits, and AI responses can all be reviewed by the user before they are returned to the server.

d. Users can redact sensitive data, auto-approve trusted operations, and validate risky actions.

e. Settings for pre-approved safe operations.

f. Activity logs for later review.
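Several of these controls (approval dialogs, pre-approved operations, and activity logs) can be combined in one gate that sits in front of every tool call. The following is a minimal sketch of that idea on the host side; the class, its fields, and the tool names are hypothetical and not defined by the protocol.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ApprovalGate:
    ask_user: Callable[[str, dict], bool]            # stands in for an approval dialog
    pre_approved: set[str] = field(default_factory=set)
    activity_log: list[dict] = field(default_factory=list)

    def authorize(self, tool: str, arguments: dict) -> bool:
        """Allow pre-approved tools directly; otherwise defer to the user. Log every decision."""
        if tool in self.pre_approved:
            allowed, decided_by = True, "pre-approved"
        else:
            allowed, decided_by = self.ask_user(tool, arguments), "user"
        self.activity_log.append(
            {"tool": tool, "arguments": arguments, "allowed": allowed, "decided_by": decided_by}
        )
        return allowed


# A stand-in dialog that rejects everything, to show the unapproved path.
gate = ApprovalGate(ask_user=lambda tool, args: False, pre_approved={"read_file"})
print(gate.authorize("read_file", {"path": "notes.txt"}))    # True
print(gate.authorize("delete_file", {"path": "notes.txt"}))  # False
print(len(gate.activity_log))                                # 2
```

Logging denied calls as well as approved ones is a deliberate choice here, since a later review is most useful when it shows what was attempted.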

Here we can see Responsible AI principles of transparency, explainability, and human-in-the-loop controls in action.

Clients also support multiple primitives such as sampling, roots, and elicitation.

Sampling allows MCP servers to request LLM completions through the client, which enables agentic flows. The MCP client stays in full control of user permissions and security. Sampling can also save cost, because the server reuses the client's model access instead of maintaining its own. Sampling respects user autonomy, and the client can warn the user if a request looks suspicious, which aids AI safety. The model can also be used to evaluate complex trade-offs among tool options and present recommendations to the user before anything is finalized.
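A sampling exchange can be sketched as a client-side handler with two human checkpoints, one before the prompt reaches the model and one before the completion goes back to the server. The request uses the sampling/createMessage method; the handler, its callbacks, the stub model, and the error code are assumptions made for this illustration.

```python
from typing import Callable

sampling_request = {
    "jsonrpc": "2.0",
    "id": 7,
    "method": "sampling/createMessage",
    "params": {
        "messages": [
            {"role": "user", "content": {"type": "text", "text": "Summarize the open issues."}}
        ],
        "maxTokens": 200,
    },
}


def handle_sampling_request(
    request: dict,
    approve_prompt: Callable[[dict], bool],
    run_model: Callable[[list, int], str],
    approve_response: Callable[[str], bool],
) -> dict:
    """Run the server's request through the client's model, with user review on both sides."""
    params = request["params"]

    def rejected(reason: str) -> dict:
        # The error code is illustrative, not a value mandated here.
        return {"jsonrpc": "2.0", "id": request["id"], "error": {"code": -1, "message": reason}}

    if not approve_prompt(params):
        return rejected("User rejected sampling request")
    text = run_model(params["messages"], params["maxTokens"])
    if not approve_response(text):
        return rejected("User rejected model response")
    return {
        "jsonrpc": "2.0",
        "id": request["id"],
        "result": {
            "role": "assistant",
            "content": {"type": "text", "text": text},
            "model": "stub-model",  # placeholder name for the stand-in model
            "stopReason": "endTurn",
        },
    }


response = handle_sampling_request(
    sampling_request,
    approve_prompt=lambda params: True,
    run_model=lambda messages, max_tokens: "Three issues are open.",
    approve_response=lambda text: True,
)
print(response["result"]["content"]["text"])
```

Because the server never holds model credentials, the client remains the single place where permissions and spending can be enforced.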

Roots are the mechanism by which MCP clients communicate access and security boundaries to MCP servers. For example, instead of giving unrestricted access to the entire file system, a client may expose only certain folders, and access may be further restricted to certain file types within them. This can significantly improve the security posture of agentic flows.
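The folder and file-type example can be sketched as a path check. This is a simplified illustration that assumes local paths rather than the URIs a real client would advertise, and the file-type filter is modeled as a separate policy following the example in the text. The function name and the sample paths are hypothetical. It needs Python 3.9 or later for `Path.is_relative_to`.

```python
from pathlib import Path


def is_allowed(path: str, roots: list[Path], allowed_suffixes: set[str]) -> bool:
    """Permit a path only if it resolves inside a root and has an allowed file type."""
    # resolve() normalizes ".." segments, so traversal out of a root is caught.
    candidate = Path(path).resolve()
    inside_root = any(candidate.is_relative_to(root.resolve()) for root in roots)
    return inside_root and candidate.suffix in allowed_suffixes


roots = [Path("/home/user/project")]
suffixes = {".py", ".md"}

print(is_allowed("/home/user/project/src/main.py", roots, suffixes))      # inside root, allowed type
print(is_allowed("/home/user/project/../.ssh/id_rsa", roots, suffixes))   # escapes the root
print(is_allowed("/home/user/project/data.bin", roots, suffixes))         # disallowed type
```

Resolving the path before comparing it matters: a plain string-prefix check would accept the second path even though it points outside the project folder.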

Elicitation is a client primitive through which a server can request specific information from the user in order to complete a workflow. For example, a server can ask for information incrementally as it is needed rather than collecting everything upfront. It can also pause a flow and resume it later. This limits data use to the points where it is needed, which improves resource efficiency. A user could also specify time windows when grid electricity is less dependent on fossil fuels, enabling carbon-aware computing. This can be a good step toward sustainable agents.
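An incremental request of this kind can be sketched as a server asking for one field at a time with the elicitation/create method and continuing only if the user accepts. The helper functions, the field name, and the message text are assumptions for illustration.

```python
def elicitation_request(request_id: int, message: str, field_name: str, field_type: str) -> dict:
    """Ask the user for a single field, described by a small JSON schema."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "elicitation/create",
        "params": {
            "message": message,
            "requestedSchema": {
                "type": "object",
                "properties": {field_name: {"type": field_type}},
                "required": [field_name],
            },
        },
    }


def collected_value(response: dict, field_name: str):
    """Return the user's answer, or None if the user declined or cancelled."""
    result = response["result"]
    if result["action"] != "accept":
        return None
    return result["content"][field_name]


ask = elicitation_request(3, "Which repository should be scanned?", "repository", "string")
accepted = {"jsonrpc": "2.0", "id": 3, "result": {"action": "accept", "content": {"repository": "docs"}}}
declined = {"jsonrpc": "2.0", "id": 3, "result": {"action": "decline"}}

print(collected_value(accepted, "repository"))  # docs
print(collected_value(declined, "repository"))  # None
```

A None result is the natural point for the server to pause the workflow and resume once the user supplies the value.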

Benefits of MCP over home-grown systems

Home-grown systems are inherently more vulnerable, because without the standardization that MCP offers, every integration adds its own implementation complexity.

Private, unvetted systems are more likely to contain security flaws that would be found quickly in an open, community-backed standard.

Home-grown systems also tend to have a fragmented security posture and can create compliance nightmares.

MCP brings a unified security model with safety guardrails built into the protocol’s core design, such as human_approval_required and audit_level, rather than added as an afterthought. A standardized communication protocol also reduces the attack surface. Most importantly, as an open standard with community backing, it benefits from constant testing and refinement, which makes it more resilient to new attack vectors. In addition to these benefits, users can set limits on resource usage, control costs, and promote greener, carbon-aware compute.

In conclusion

In essence, MCP transcends a simple API specification; it represents a fundamental shift toward a more interoperable, secure, and responsible ecosystem for AI agents. By providing a standardized language for communication, MCP drastically reduces the complexity of integrations, enabling a future where AI assistants can seamlessly and safely interact with a vast network of tools and data sources. This open standard fosters innovation, enhances security through shared best practices, and places the user firmly in control, ensuring that as AI becomes more capable, it remains transparent, accountable, and aligned with human values. MCP is the blueprint for a connected and safer agentic future.