Recently I was pondering over some latency challenges while implementing Responsible AI guardrails, and decided to wear an Architect hat.

Guardrails are very relevant for user and business safety, and yet they can introduce some architectural trade-offs that one must evaluate carefully.

First one is computational latency. Imagine you are building a chatbot and every question and answer has to go through a guardrail. Now this is going to add to response time, and the user experience can suffer.

Next is resource utilization. More compute means more energy use, and some of these guardrails use LLMs to check other LLMs, meaning you are talking fairly significant inference energy use, especially when you have lot of concurrent users hitting the LLMs.

Then we have complexity of Gen AI solution itself as now one needs to factor the development, testing, deployment, and support of these guardrails.

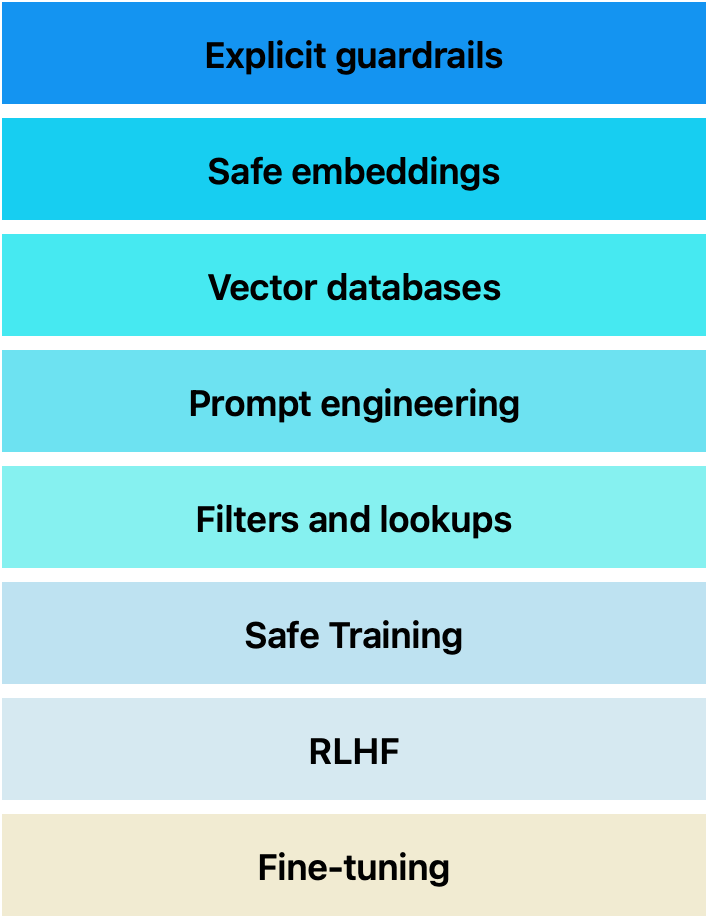

So drawing on ideas from traditional cyber-security we can think of securing LLMs using a spectrum of techniques starting from the innermost core of model and all the way to perimeter security guardrails on the far external boundary near the load-balancer, and a myriad of other techniques in between.

A high risk use-case may need all these layer protections to guard against risks such as hallucination, bias, prompt injection, and misuse. Whereas, medium or low-risk use-case may choose only a subset of all these techniques and thereby optimize on other NFRs such as efficiency and latency.

Layer 1: Model level defenses (innermost layer)

Fine-Tuning for Domain Appropriateness One of the strongest safeguards is ensuring that the model itself is aligned with responsible AI principles. Fine-tuning on domain-specific, high-quality datasets can:

- Reduce harmful biases.

- Align responses with ethical standards.

- Improve model reliability in sensitive applications (e.g., healthcare, finance).

Reinforcement Learning from Human Feedback (RLHF) Leveraging RLHF further refines model outputs by incorporating human oversight. This approach helps:

- Discourage toxic or misleading responses.

- Encourage factual accuracy and adherence to norms.

Training Data Curation The quality of training data directly impacts model behavior. Ensuring diverse, well-labeled, and unbiased training data minimizes the risk of:

- Embedded biases.

- Misinformation propagation.

Layer 2: Prompt Engineering & Input Preprocessing

Structured Prompt Engineering Crafting well-structured prompts can guide the model towards safer, contextually appropriate outputs. Techniques include:

- Chain-of-thought prompting to encourage step-by-step reasoning.

- Instruction tuning to constrain responses.

- Role-based prompting to enforce responsible behavior (e.g., “You are hospital chatbot to answer admission questions only…”).

Input Filtering & Lookups Implementing basic filters to detect unsafe input patterns can prevent:

- Malicious prompt injections.

- Leaked sensitive information. Using predefined lookup lists (e.g., blocklists for hate speech, personally identifiable information) adds an extra layer of security.

Layer 3: Embedding-Based Safeguards

Embedding models capture semantic meanings and can be leveraged to:

- Filter toxic content before feeding data into an LLM.

- Detect anomalous queries that deviate from expected behavior.

- Validate output safety by checking against gold-standard embeddings. For instance, contrastive learning can be used to ensure that an LLM’s generated responses align with known safe responses.

Layer 4: Vector Database Safety Enhancements

Vector databases store context-aware embeddings for retrieval-augmented generation (RAG) but can also introduce risks (e.g., hallucinations, incorrect retrieval). Mitigation strategies include:

- Semantic filtering to exclude unreliable context snippets.

- Confidence scoring to prioritize high-certainty sources.

- Red teaming vector queries to proactively test for vulnerabilities.

Layer 5: Explicit Guardrails & API-Level Defenses

LLM-as-Judge or Agents for Validation Deploying a secondary LLM to verify responses before final output (e.g., OpenAI’s Moderation API) can:

- Catch toxic or misleading content.

- Ensure compliance with regulatory constraints.

API Gatekeeping & Rate Limiting Enforcing API-level controls helps prevent abuse and denial-of-service attacks by: Setting usage quotas based on risk levels. Implementing real-time monitoring for anomalous patterns.

Layer 6: Adversarial Testing & Continuous Monitoring (Outermost Layer)

Since LLMs exhibit emergent behaviors, it is impossible to guarantee 100% safety. A proactive approach involves:

- Simulated adversarial attacks to test for vulnerabilities.

- Continuous audits and logging for transparency and explainability.

Few things can be done to mitigate the tradeoffs arising from these defense techniques which itself aims to mitigate the AI safety risks.

- Not all the above techniques have to be run for every use-case. You should test your usage patterns and only choose the 20% of these techniques that give you 80% cover first. Rest can be introduced after monitoring actual telemetry data from application use.

- Some of the frameworks like Nemo Guardrails allow you to execute the validations in parallel using Async execution versus sequentially by blocking LLM, so this can help in reducing LLM response latency.

- Similarly, the output response coming from LLM can also be streamed chunk by chunk, and the validation can happen incrementally versus waiting for all tokens to be ready before validation.

- Resource efficiency of the guardrails can be tested in pre-production along with efficacy of blocking harm, and you can prioritize the least intensive, simple techniques that give good results over the more complex ones.

- Finally, as the LLM technology keeps evolving experiment with advanced techniques of LLM-as-judge, highly targeted agents-as-validators etc. and see if they bring higher accuracy along with other benefits.

Consider a bank deploying an AI chatbot for customer support. A defense-in-depth approach might include:

- Fine-tuning the model on financial FAQs and regulatory guidelines.

- Prompt engineering to ensure it never provides financial advice.

- Input validation filters to detect fraud-related queries.

- Embedding-based safety checks to block toxic language.

- LLM-as-a-judge system to validate high-risk queries (e.g., “How do I launder money?”).

- Continuous monitoring for adversarial prompts.

By balancing these layers, the chatbot remains responsive while mitigating risks.

In summary:

Defense-in-depth is essential for securing Gen AI applications. Depending on use case risk levels, developers must select a subset of these safeguards to balance security, performance, efficiency, and scalability. While no method guarantees absolute protection, a layered approach significantly reduces vulnerabilities, ensuring responsible AI deployment.