In previous posts we saw how LangChain can be used to build LLM-based applications, and how libraries like PandasAI can even be used for data engineering tasks through natural language interaction. In this post we dive a bit deeper into three powerful concepts supported by LangChain: prompt templates, chains, and agents.

A prompt is typically composed of:

- Instructions to the LLM on WHAT to do, HOW to go about it, and expected outputs.

- Context such as custom documents, external database information, or data sourced from APIs.

- User input used to query the model.

- Output indicator to mark the beginning of LLM return values.

As good software engineering practice, we should not hardcode these values. This is where PromptTemplate comes in.

In its simplest form, a PromptTemplate takes a list of input variables and a “template” string that contains the question, with placeholders for those variables that are substituted at runtime.

PromptTemplate = FunctionOf(input variables, template string).

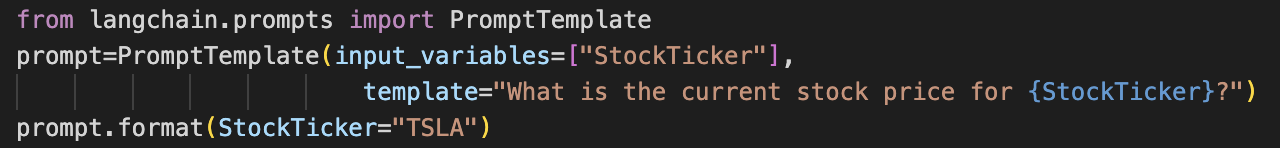

An example that takes a stock ticker as the variable and asks for its price is shown below:

The input variable list above can also hold multiple comma-separated values if needed.
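To make the "input variables plus template string" idea concrete, here is a minimal plain-Python sketch. `SimplePromptTemplate` is a hypothetical stand-in written for this post, not LangChain's own class, and the `exchange` variable is an assumption added only to show a second input.

```python
from string import Formatter


class SimplePromptTemplate:
    """Hypothetical stand-in: a template string plus its declared variables."""

    def __init__(self, input_variables, template):
        # Collect the names that actually appear as {placeholders} in the template.
        found = {name for _, name, _, _ in Formatter().parse(template) if name}
        if found != set(input_variables):
            raise ValueError(
                f"declared {sorted(input_variables)} but template uses {sorted(found)}"
            )
        self.input_variables = list(input_variables)
        self.template = template

    def format(self, **values):
        # Refuse to render if any declared variable has no runtime value.
        missing = set(self.input_variables) - set(values)
        if missing:
            raise KeyError(f"missing values for: {sorted(missing)}")
        return self.template.format(**values)


# One input variable: the stock ticker.
single = SimplePromptTemplate(
    input_variables=["ticker"],
    template="What is the current stock price of {ticker}?",
)

# Several input variables, listed comma-separated.
multi = SimplePromptTemplate(
    input_variables=["ticker", "exchange"],
    template="What is the current stock price of {ticker} on {exchange}?",
)

print(single.format(ticker="AAPL"))
print(multi.format(ticker="AAPL", exchange="NASDAQ"))
```

Checking the declared variables against the placeholders at construction time means a typo in the template surfaces immediately rather than when the prompt is sent to the model.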

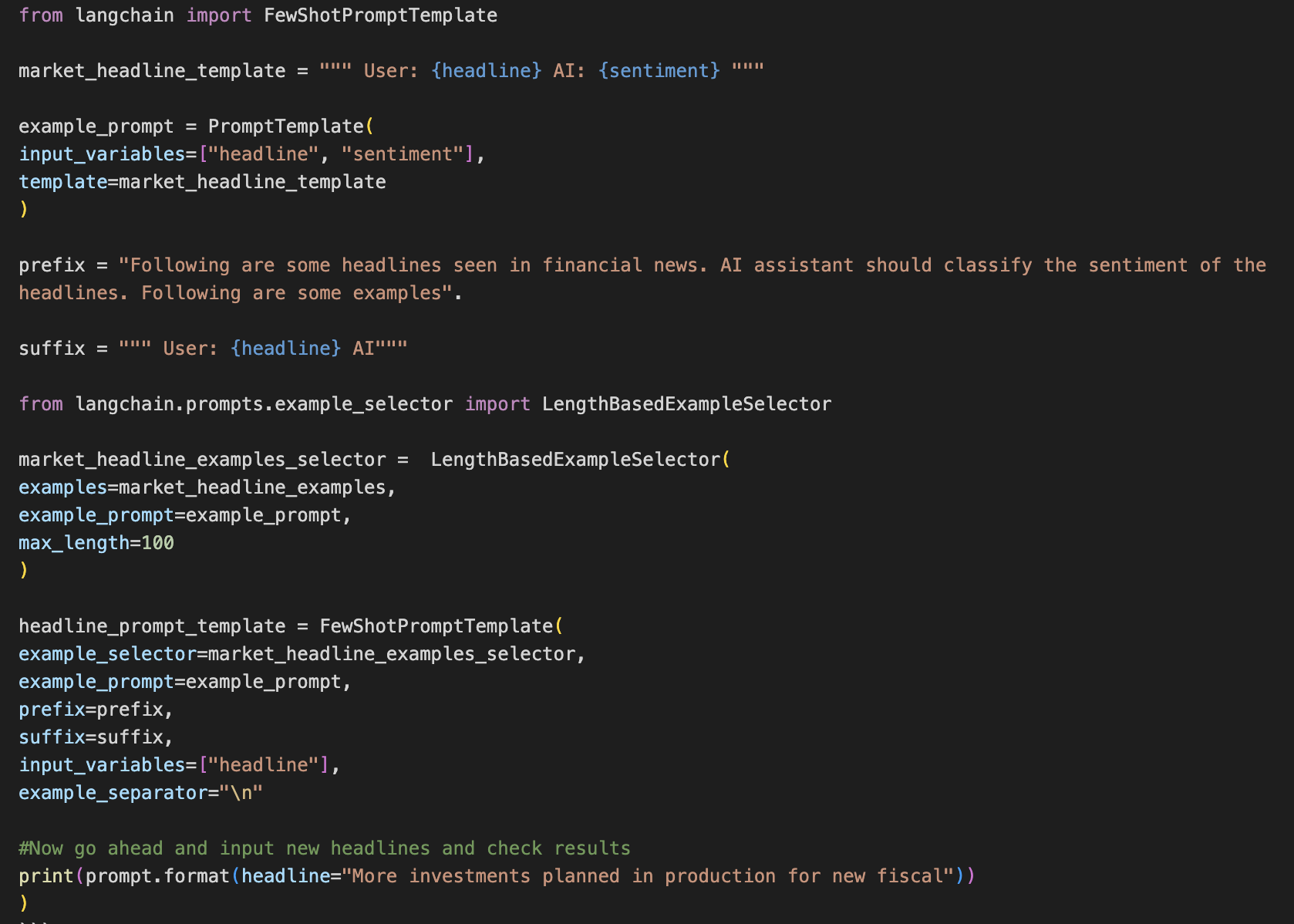

A more advanced kind of template is FewShotPromptTemplate.

Let’s say you want to classify financial news as good or bad.

Unlike generic sentiment analysis, financial news contains stock-market-specific entities and terms, so a few examples will help the model.

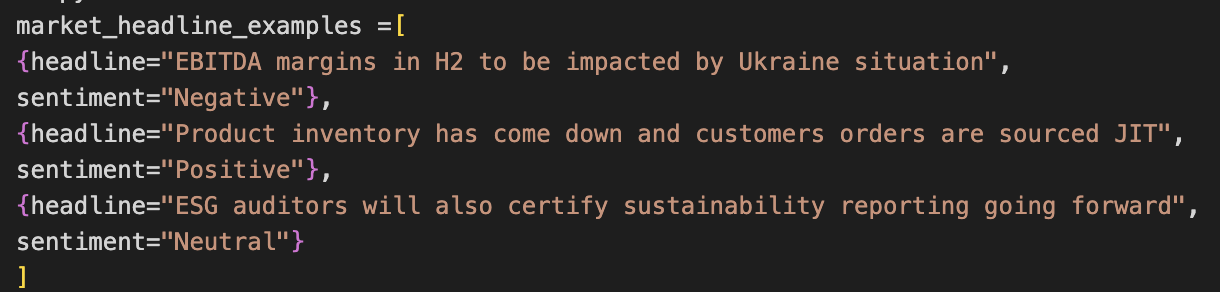

First we make a list of examples.
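As a rough illustration of what a few-shot prompt looks like once assembled, the sketch below builds one by hand in plain Python. The headlines, labels, and helper name `build_few_shot_prompt` are all invented for this example and are not taken from LangChain.

```python
# Hypothetical labelled examples; the headlines are invented for illustration.
examples = [
    {"headline": "Company X beats quarterly earnings estimates", "label": "good"},
    {"headline": "Company Y cuts its full-year revenue guidance", "label": "bad"},
    {"headline": "Company Z announces a share buyback programme", "label": "good"},
]

EXAMPLE_TEMPLATE = "Headline: {headline}\nSentiment: {label}"
PREFIX = "Classify each financial news headline as good or bad."
# The trailing "Sentiment:" acts as the output indicator for the model.
SUFFIX = "Headline: {headline}\nSentiment:"


def build_few_shot_prompt(headline, examples):
    """Assemble instructions, formatted examples, and the new query into one prompt."""
    shots = [EXAMPLE_TEMPLATE.format(**example) for example in examples]
    return "\n\n".join([PREFIX, *shots, SUFFIX.format(headline=headline)])


prompt = build_few_shot_prompt("Company W issues a profit warning", examples)
print(prompt)
```

The structure mirrors the prompt parts listed earlier: instructions first, examples as context, then the user input followed by an output indicator.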

Given the cost implications of input size, we can also use “LengthBasedExampleSelector” to cap the combined length of the few-shot examples we pass in, and hence the number of tokens sent to the model.
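The selection idea can be sketched in a few lines of plain Python. This is an assumption-laden illustration, not the library's implementation: length is measured as a crude whitespace word count rather than real tokens, and `select_examples_by_length` is a hypothetical helper.

```python
def word_count(text):
    # Crude length measure: whitespace-separated words, not real tokens.
    return len(text.split())


def select_examples_by_length(examples, query, max_length):
    """Keep examples in order while the running word count stays within max_length."""
    remaining = max_length - word_count(query)
    selected = []
    for example in examples:
        cost = word_count(example)
        if cost > remaining:
            break  # the next example would exceed the budget, so stop here
        selected.append(example)
        remaining -= cost
    return selected


examples = [
    "Headline: Company X beats earnings estimates\nSentiment: good",
    "Headline: Company Y cuts revenue guidance\nSentiment: bad",
    "Headline: Company Z announces a share buyback\nSentiment: good",
]

short_query = "Company W issues a profit warning"
long_query = short_query + " " + "and more details follow " * 5

# A longer query leaves less room in the budget, so fewer examples are kept.
print(len(select_examples_by_length(examples, short_query, max_length=40)))
print(len(select_examples_by_length(examples, long_query, max_length=40)))
```

The useful property is that the number of examples shrinks automatically as the user input grows, so the overall prompt stays within a fixed budget.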

Further, the prompts, templates, and examples above can be shared by creating reusable libraries targeted at specific types of tasks, such as financial news, e-commerce marketing, or product sales campaigns. Example: Hero Prompt Library.

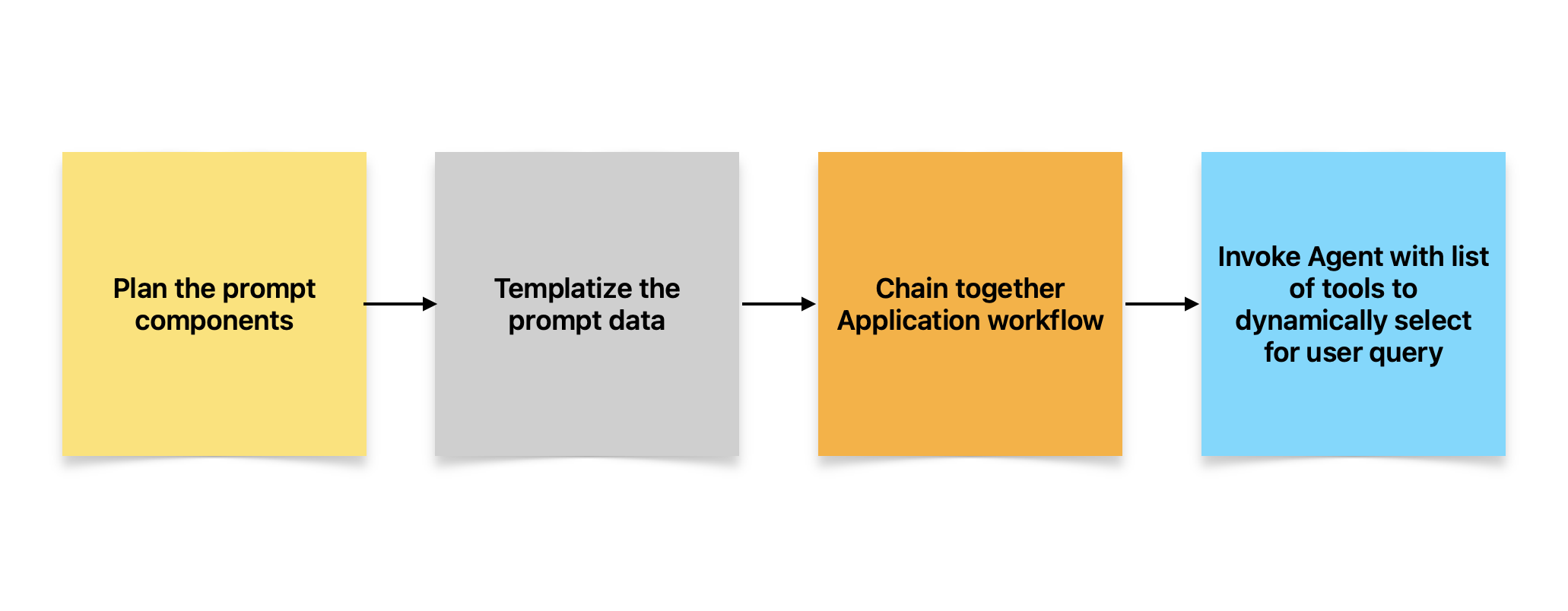

The prompting techniques above can also be combined with chains. Chains are a mechanism for combining multiple components into a sequence of calls. For example, one component could take user input, another could query a database table, and a third could fetch an API response; chains allow all of these to be combined into a coherent application flow.
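Conceptually, a chain is function composition: each step's output becomes the next step's input. The sketch below shows this in plain Python under that assumption. `make_chain` and the three step functions are hypothetical stand-ins for real components (user input handling, a database lookup, prompt building), and the table contents are placeholders.

```python
from functools import reduce


def make_chain(*steps):
    """Compose steps left to right: each step's output feeds the next step."""
    return lambda value: reduce(lambda acc, step: step(acc), steps, value)


def parse_user_input(text):
    # Step 1: normalise raw user input into a small state dictionary.
    return {"ticker": text.strip().upper()}


def lookup_company(state):
    # Step 2: stand-in for a database query; the row is a placeholder.
    fake_table = {"ACME": "Acme Corp (placeholder row)"}
    return {**state, "company": fake_table.get(state["ticker"], "unknown")}


def build_prompt(state):
    # Step 3: turn the accumulated state into the prompt for the model.
    return f"Summarise recent news for {state['company']} ({state['ticker']})."


pipeline = make_chain(parse_user_input, lookup_company, build_prompt)
print(pipeline("  acme "))
```

Because each step only depends on the shape of its input, steps can be swapped or reordered without touching the others.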

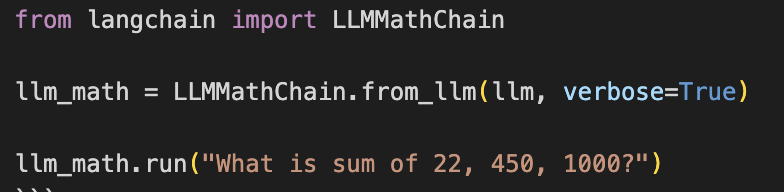

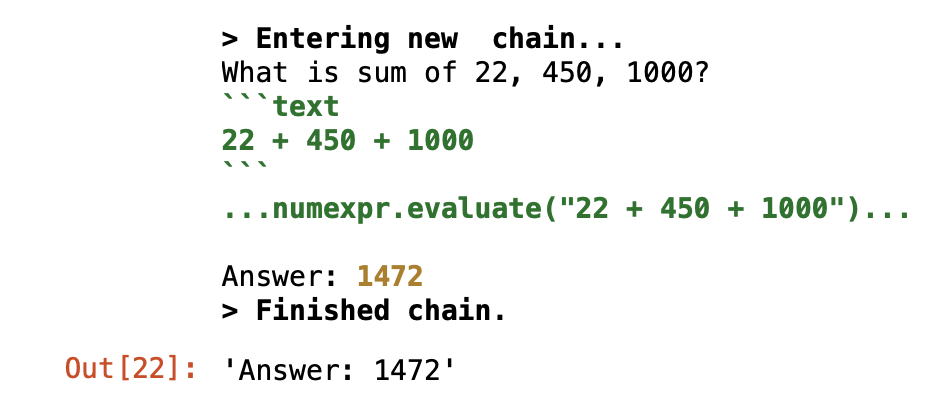

Below is an example of how a simple chain can be combined with prompts.

And this outputs:

Here the model interprets the query text and extracts the numbers, which the math chain component then evaluates. Note that the numbers could be encapsulated in variables and combined with a PromptTemplate, as shown in the previous examples.
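The two-stage flow described here (translate the question into an expression, then evaluate it) can be approximated without a model. In this sketch `fake_llm_to_expression` is a hypothetical, rule-based stand-in for the LLM step, and the sample question is an assumption rather than the query from the original example.

```python
import ast
import operator
import re

# Arithmetic operators the evaluator is willing to apply.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}

# Phrases the toy translator recognises, mapped to operator symbols.
_WORDS = {"plus": "+", "minus": "-", "multiplied by": "*", "divided by": "/"}


def fake_llm_to_expression(question):
    """Stand-in for the LLM: extract two numbers and one operation from the text."""
    numbers = re.findall(r"\d+(?:\.\d+)?", question)
    symbol = next((s for word, s in _WORDS.items() if word in question.lower()), None)
    if len(numbers) != 2 or symbol is None:
        raise ValueError("this toy translator only handles '<a> <operation> <b>'")
    return f"{numbers[0]} {symbol} {numbers[1]}"


def safe_eval(expression):
    """Evaluate +, -, *, / on numeric literals without calling eval()."""

    def walk(node):
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](walk(node.left), walk(node.right))
        raise ValueError("unsupported expression")

    return walk(ast.parse(expression, mode="eval").body)


def math_chain(question):
    # Chain the two steps: text -> expression -> numeric result.
    return safe_eval(fake_llm_to_expression(question))


print(math_chain("What is 13 multiplied by 4?"))
```

Keeping the arithmetic out of the model and in a deterministic evaluator is the point of the design: the model only has to understand the question, not compute the answer.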

To recap the USPs of LangChain:

- Data-aware: We have seen how to connect our LLM to external data sources.

- Agentic: Language models also need to interact with their environments.

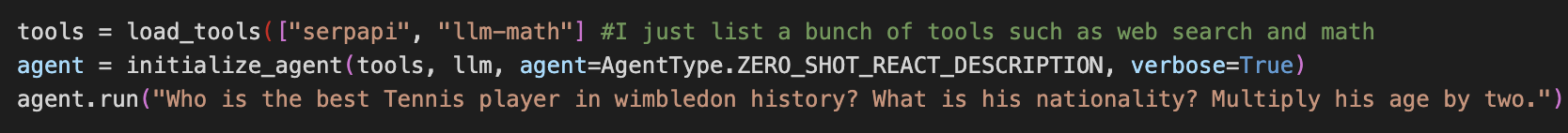

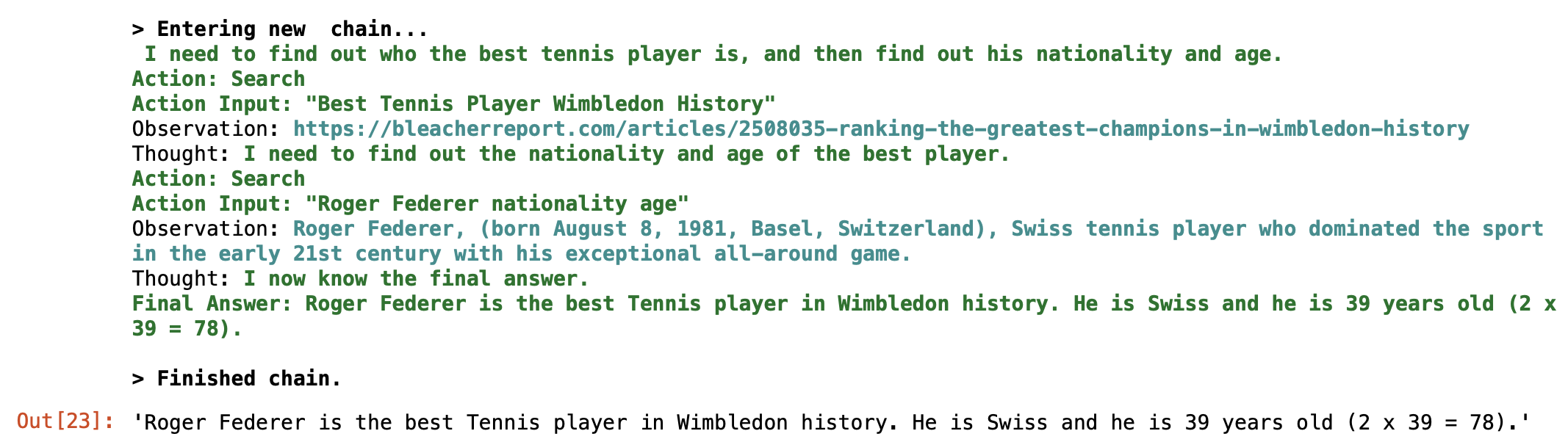

Agents are the next powerful idea: they allow the LLM to decide which tools to use at runtime, without the user explicitly doing the mapping.

For example, I may want to know who the best tennis player in the history of Wimbledon is, along with their nationality, and then multiply the player’s age by 2!

And this is the chain output I get, clearly identifying FedEx (Roger Federer) as the best. Note that it has judiciously selected a website using the Google search tool and then moved on to use the Math tool to multiply the age by two!
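The decide-act-observe loop behind an agent can be sketched in plain Python. Everything here is a hypothetical stand-in: `fake_policy` replaces the LLM's reasoning with fixed rules, the search tool returns a placeholder string rather than real data, and the calculator only multiplies.

```python
def search_tool(query):
    # Placeholder result; a real tool would call a search API.
    return "Player X is 40 years old (placeholder, not real data)"


def calculator_tool(expression):
    # Toy calculator that only understands "<a> * <b>".
    left, op, right = expression.split()
    if op != "*":
        raise ValueError("toy calculator only multiplies")
    return str(float(left) * float(right))


TOOLS = {"search": search_tool, "calculator": calculator_tool}


def fake_policy(question, observations):
    """Stand-in for the LLM: pick the next (tool, input) or return the final answer."""
    if not observations:
        return ("search", question)
    if len(observations) == 1:
        # Pull the first number out of the search result and double it.
        age = next(word for word in observations[0].split() if word.isdigit())
        return ("calculator", f"{age} * 2")
    return ("final", observations[-1])


def run_agent(question, max_steps=5):
    observations, trace = [], []
    for _ in range(max_steps):
        tool, tool_input = fake_policy(question, observations)
        if tool == "final":
            return tool_input, trace
        trace.append(tool)  # record which tool was chosen at this step
        observations.append(TOOLS[tool](tool_input))
    raise RuntimeError("agent did not finish within max_steps")


answer, trace = run_agent("How old is Player X, and what is that age times 2?")
print(trace, answer)
```

The caller never states which tool to use or in what order; the policy decides that at each step from the question and the observations so far, which is the behaviour the agent output above demonstrates with a real model.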

So we saw a few examples of prompt templates, chaining to create pipelines, and agents that decide dynamically at runtime which tools to use.

This is still just the tip of the iceberg. We can go deeper down the rabbit hole of nuances in these concepts, but that is for another day!

For the full archive of my posts, visit: The Pipeline

Credits:

LangChain documentation site