In earlier articles I showed how to build text-to-text chat apps and even query tabular data using LLMs. In this post we will explore how to use prompt engineering to generate neural network code that a beginner can use to learn deep learning and neural network concepts. The key to code generation and experimentation is designing good prompts, then following up iteratively to address the next logical questions and the gaps in the output.

Prompting journey and outputs

My journey began with a simple prompt to GPT: "Generate code demonstrating the simplest neural network architecture. Comment the code so the concept becomes clear to a newbie to Neural nets."

The code it generated failed to execute because it was incompatible with my PyTorch version. GPT-4 also made up a meaningless random dataset with positive and negative labels.

Prompt 2: "Search the web and find a meaningful dataset. Note that I have Pytorch 2.0.0 . "

At this point it updated the code to download the Pima Indians Diabetes dataset, to illustrate disease prediction based on lifestyle factors. The compatibility issue was also resolved, but one line of the code still had a bug.

In prompt 3 I simply pasted the error and asked GPT to fix it. It did, and the code executed fine!

So you can see that code generation through this kind of interaction is iterative by nature. Also, try to get the simplest version of the code working as soon as possible; otherwise debugging can become a nightmare.

Next, evolve the code architecture and features incrementally. Take just one simple step at a time; asking for too much at once can easily confuse GPT-4. Be prescriptive, even about how it should lay out the code, by giving your comments as context in the prompt.
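To give a sense of what a "simplest version" can look like, here is a minimal sketch in PyTorch. It is not the code GPT generated for me; the layer sizes, learning rate, and the synthetic stand-in data are all assumptions chosen for illustration, and you would swap in the real, normalized dataset columns.

```python
import torch
from torch import nn

torch.manual_seed(0)  # make the run repeatable

# ASSUMPTION: synthetic stand-in data with 8 numeric features and a binary label.
# Replace X and y with the real, normalized dataset columns.
X = torch.randn(200, 8)
y = (X[:, 1] + 0.5 * X[:, 5] > 0).float().unsqueeze(1)

# Hold out the last 40 rows as unseen test data.
X_train, y_train = X[:160], y[:160]
X_test, y_test = X[160:], y[160:]

# One hidden layer with a ReLU activation; the output is a single logit.
model = nn.Sequential(nn.Linear(8, 16), nn.ReLU(), nn.Linear(16, 1))
loss_fn = nn.BCEWithLogitsLoss()  # applies the sigmoid internally
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

first_loss = None
for epoch in range(200):
    optimizer.zero_grad()                     # clear gradients from the last step
    loss = loss_fn(model(X_train), y_train)   # forward pass and loss
    loss.backward()                           # backpropagation
    optimizer.step()                          # gradient descent update
    if first_loss is None:
        first_loss = loss.item()
final_loss = loss.item()

with torch.no_grad():
    predictions = (model(X_test) > 0).float()  # logit > 0 means probability > 0.5
    test_accuracy = (predictions == y_test).float().mean().item()

print(f"loss: {first_loss:.3f} -> {final_loss:.3f}, test accuracy: {test_accuracy:.2%}")
```

Once something this small runs end to end, each later prompt only has to change one thing, which keeps errors easy to locate.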

Prompt 4: "Now print comments in output that show steps such as downloading and preparing input data, fixing the neural net architecture and its activations, running gradient descent, creating model, and then testing the model on unseen data."

Now the output of the code was much easier to follow!

I decided to take it up a notch now.

Prompt 5: "Inject explainability into the code above and generate outputs in natural language for user to interpret."

The output was as follows: "The model was trained on the Pima Indians Diabetes Dataset. The dataset has 8 features (Number of times pregnant, Plasma glucose concentration, Diastolic blood pressure, Triceps skin fold thickness, 2-Hour serum insulin, Body mass index, Diabetes pedigree function, Age) and the model predicts whether a person has diabetes or not.

The accuracy of the model on the training data is 49.22%

To add some level of explainability to the model, a Random Forest model was also trained on the same data to determine the importance of each feature.

The importance of the features according to the Random Forest model are:

Number of times pregnant: 8.18%

Plasma glucose concentration: 24.01%

Diastolic blood pressure: 8.72%

Triceps skin fold thickness: 7.02%

2-Hour serum insulin: 8.31%

Body mass index: 17.25%

Diabetes pedigree function: 12.83%

Age: 13.69%

Note: This is a simplified example and in a real-world scenario, more rigorous evaluation methods and more complex models could be used."
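For readers who want to see how such feature importances can be produced, here is a small sketch using scikit-learn's `RandomForestClassifier` and its `feature_importances_` attribute. The feature names come from the output above; the data is a synthetic stand-in (an assumption), so the percentages it prints will not match the ones GPT reported.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier

feature_names = [
    "Number of times pregnant",
    "Plasma glucose concentration",
    "Diastolic blood pressure",
    "Triceps skin fold thickness",
    "2-Hour serum insulin",
    "Body mass index",
    "Diabetes pedigree function",
    "Age",
]

# ASSUMPTION: synthetic stand-in data in which the second and sixth columns
# drive the label. Replace X and y with the real dataset.
rng = np.random.default_rng(0)
X = rng.normal(size=(300, len(feature_names)))
y = (X[:, 1] + 0.5 * X[:, 5] > 0).astype(int)

forest = RandomForestClassifier(n_estimators=100, random_state=0)
forest.fit(X, y)

# Impurity-based importances: one value per feature, summing to 1.
importances = forest.feature_importances_
ranked = sorted(zip(feature_names, importances), key=lambda pair: pair[1], reverse=True)
for name, score in ranked:
    print(f"{name}: {score:.2%}")
```

Note that these importances describe the Random Forest, not the neural network, so they are a rough guide to which inputs carry signal rather than an explanation of the network itself.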

Prompt 6: "add in a module in code to illustrate any ethical concerns in Data"

Output: "One of the ways we can illustrate an ethical concern is by checking for bias in the dataset. In the context of the Pima Indians Diabetes dataset, let's say we want to check if the dataset is biased towards the age of the patients.

In this hypothetical scenario, let's say the dataset was collected from a specific area where most of the patients are of a certain age group, this could bias the model towards making more accurate predictions for that age group and less accurate predictions for others."

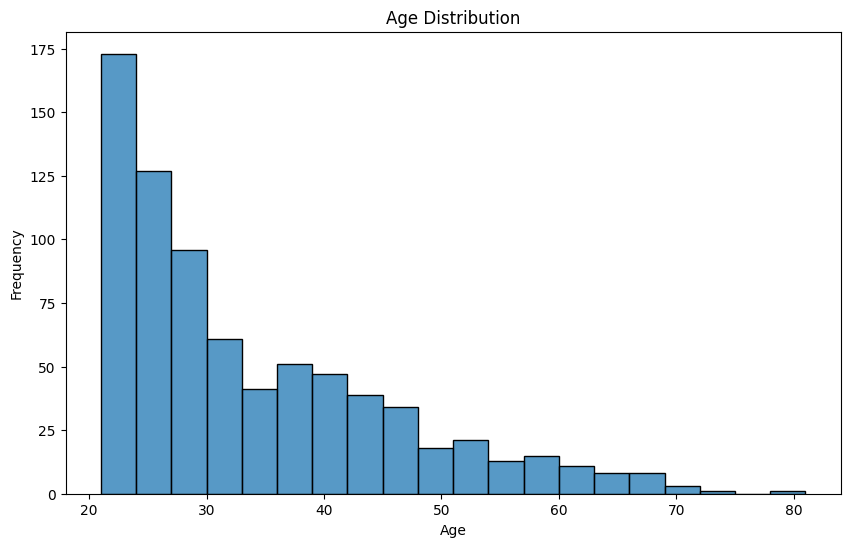

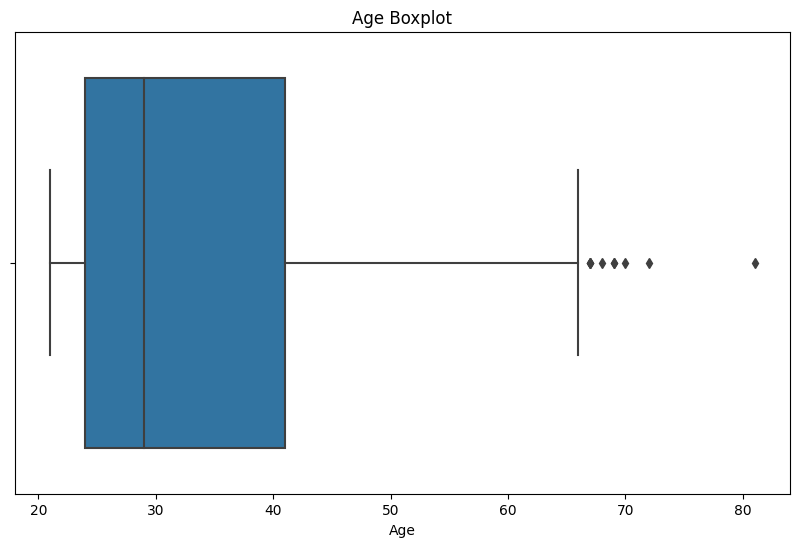

It generated histograms of age, and we can see that the majority of patients fall in the 20-40 age range!
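The same kind of check can be done without a plot by counting what share of records falls into each age band. The sketch below uses only the standard library; the function name `age_band_shares` and the sample ages are hypothetical, made up purely for illustration.

```python
from collections import Counter


def age_band_shares(ages, width=10):
    """Return {band label: share of records} for fixed-width age bands."""
    if not ages:
        return {}
    counts = Counter((age // width) * width for age in ages)
    total = len(ages)
    return {
        f"{start}-{start + width - 1}": counts[start] / total
        for start in sorted(counts)
    }


# ASSUMPTION: hypothetical ages, not values taken from the real dataset.
sample_ages = [21, 22, 24, 25, 27, 29, 31, 33, 36, 38, 41, 45, 52, 63]
shares = age_band_shares(sample_ages)

# Print a rough text histogram, one row per age band.
for band, share in shares.items():
    print(f"{band}: {share:.0%} {'#' * round(share * 20)}")
```

If one or two bands hold most of the records, that is a signal to evaluate the model separately on the under-represented bands.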

Learnings:

- Clear, precise prompts helped me get code that was accurate and helpful.

- Iteratively asking the AI follow-up questions helped GPT evolve the code and helped me deepen my understanding without being overwhelmed by a lot of complex code at once.

- Sometimes GPT does not get the answer right because it is not aware of your environment. In this case, explicitly sharing my environment details as context got me code that resolved the compatibility issues.

- Experimenting with different phrasings of the same prompt helped fine-tune the resulting code.

- I used AI not only for code generation but also for data analysis and visualization. More importantly, I was able to leverage it as a personalized coach in my journey.
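On the point about sharing environment details: one way to collect them is a small helper that prints the Python version and the installed versions of the packages you care about, ready to paste into a prompt. This is only a sketch; the helper name `describe_environment` and the package list are my own choices for illustration.

```python
import platform
from importlib import metadata


def describe_environment(packages):
    """Return a short text summary of the Python and package versions."""
    lines = [f"Python {platform.python_version()} on {platform.system()}"]
    for name in packages:
        try:
            lines.append(f"{name} {metadata.version(name)}")
        except metadata.PackageNotFoundError:
            lines.append(f"{name} (not installed)")
    return "\n".join(lines)


# Paste the printed summary into the prompt as context.
print(describe_environment(["torch", "scikit-learn"]))
```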

Conclusion

Through iterative prompting, I was able to use an AI assistant not only to grasp the concept of neural networks but also to build a working model, incorporate explainable AI, and generate visualizations to check for bias. This approach allowed me to learn at my own pace, ask questions as they arose, and gain a deeper understanding of each aspect of neural networks.

The experience has been enlightening, and I encourage anyone interested in learning AI and neural networks to try this approach. Remember, the key to learning is asking the right questions. So, don’t be afraid to prompt, iterate, and learn!